- What Is Airflow?

- How Is Airflow Used?

- Why Not Use a CronJob?

- What Is Airflow: The Features You Should Know

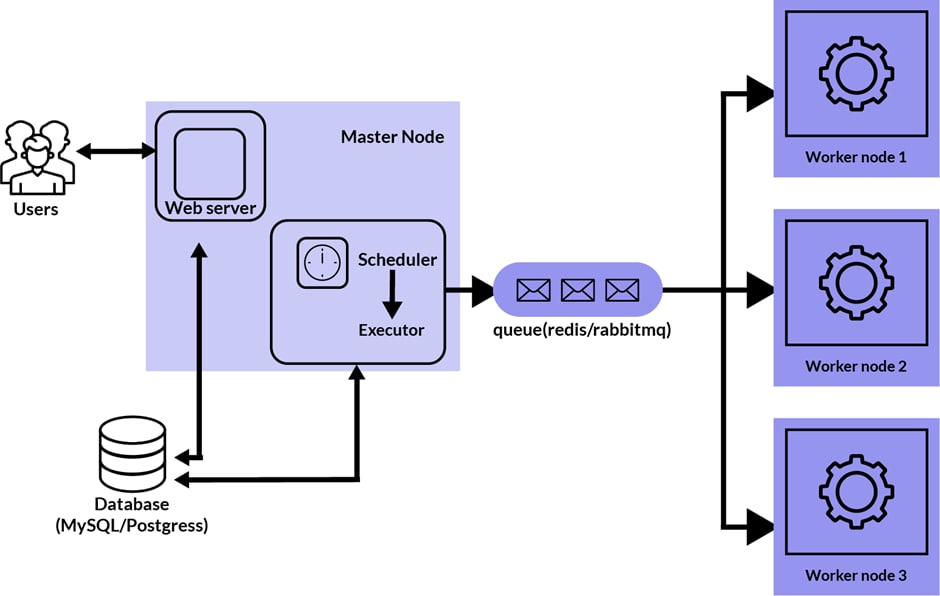

- Apache Airflow Architecture

- Airflow Pros and Cons

- The Core Concepts of Apache Airflow

- A Quick Example of a Typical Airflow Code

- Creating Your First DAG: A Step-by-Step Guide

- Testing the DAG

- Cloud Solutions (MWAA vs. Google Cloud Composer vs. Astronomer)

- The Best Practices for Using Airflow

- Need IT Consulting Help?

- Wrapping It All Up

Automation has become a pillar of higher functional efficiency and productivity, regardless of industry. So it’s no wonder smart business owners are trying to leverage automated systems and tools for their daily work processes.

However, many still do not understand how to automate some tasks and end up in a loop where they manually carry out the same tasks repeatedly.

For them, this introduction to Apache Airflow can come in handy.

Apache Airflow is an excellent tool to automate your business operations, and we’ll show you why. In this article, we walk you through an in-depth Apache Airflow overview, including how to install it, create a workflow, and code it in Python.

What Is Airflow?

Apache Airflow is an open-source tool that allows you to create, schedule, and monitor workflows within your organization. In other words, it helps you visualize and track your data pipeline’s progress, task dependencies, logs, and success status, and it lets you trigger tasks.

With this tool, you design your workflows as Directed Acyclic Graphs (DAGs) of tasks. This gives you a bird’s-eye view of your data flow, making it easier to monitor workflows and quickly spot issues in the pipeline.

Because pipelines are defined in Python, you can execute Bash commands, use external modules such as pandas or scikit-learn, use the Google Cloud Platform (GCP) or Amazon Web Services (AWS) libraries to manage cloud services, and more.

It’s no wonder Apache Airflow is one of the most widely used platforms among data engineers and data scientists who want to orchestrate workflows and pipelines. For instance, companies like Pinterest, GoDaddy, and DXC Technology have leveraged Airflow to solve their performance and scalability problems.

In addition to its DAG offerings, Apache Airflow also connects seamlessly with various data sources and can send you alerts on completed or failed tasks via email or Slack.

How Is Airflow Used?

Apache Airflow can be critical for:

- Looping through defined pipelines

- Organizing periodic job processes that have complex logic in an easily digestible tree view

- Orchestrating complex data pipelines across data warehouses and object stores

- Running non-data-related workflows

- Creating and managing scripted data pipelines as code (Python); it is a code-first platform

Why Not Use a CronJob?

While a CronJob is another appealing option for task management, it falls short on scalability and several other pain points. Here are a few reasons CronJobs may not be the best option for managing your workflows.

Changes to the CronTab File Are Not Easily Traceable

While the CronTab file keeps a schedule of jobs across several projects, the file is not tracked in source control or integrated into the project deployment process. In other words, there’s no version history.

When your employees edit scheduled jobs, the CronTab file does not record these edits over time or across dependent projects.

Job Performance Is Not Transparent

Another issue is how a CronJob handles job output. Output is logged on the server where each job ran, not in a centralized location. As a result, team members cannot easily tell whether a job succeeded or failed, which creates issues for teams further down the workflow pipeline.

Rerunning Jobs That Failed Is Ad Hoc and Difficult

Because Cron runs with a limited set of environment variables, a Bash command in the CronTab file often does not produce the same output as it does in a developer’s terminal, typically because Cron does not load the user’s Bash profile settings. As a result, developers have to recreate all the dependencies of the user’s environment before they can rerun a command.
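In Airflow, each task can declare its own retry policy through task arguments such as retries, retry_delay, and on_failure_callback, so rerunning a failed job no longer has to be improvised by hand. The sketch below is a plain-Python model of that idea, not Airflow’s implementation; run_with_retries and flaky_job are hypothetical names used only for illustration.

```python
import time
from typing import Any, Callable, Optional


def run_with_retries(
    task: Callable[[], Any],
    retries: int = 2,
    retry_delay: float = 1.0,
    on_failure_callback: Optional[Callable[[dict], None]] = None,
) -> Any:
    """Run task; on error, retry up to `retries` more times before giving up.

    Loosely modeled on Airflow's retries / retry_delay / on_failure_callback
    task arguments. This is an illustration, not Airflow's code.
    """
    attempt = 0
    while True:
        attempt += 1
        try:
            return task()
        except Exception as exc:
            if attempt > retries:
                # Out of retries: notify (in Airflow this might send an email
                # or Slack alert) and let the error propagate.
                if on_failure_callback is not None:
                    on_failure_callback({"attempt": attempt, "exception": exc})
                raise
            time.sleep(retry_delay)  # back off before the next attempt


# Demo: a job that fails twice with a transient error, then succeeds.
calls = {"n": 0}


def flaky_job() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("transient error")
    return "done"


print(run_with_retries(flaky_job, retries=2, retry_delay=0))  # prints: done
```

The retry policy lives next to the task definition, so it is versioned and reviewed along with the rest of the pipeline code.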

What Is Airflow: The Features You Should Know

Here are some of the most appealing features of Apache Airflow:

- Easy to use. You don’t have to be a programming expert to use Airflow. As long as you have some basic Python code knowledge, you’ll typically be able to deploy workflows using Apache Airflow without stress.

- Open-source. Apache Airflow is free, publicly accessible, and backed by a large, active community.

- Robust integrations. Another shining point for Apache Airflow is the number of integrations it supports. You can work with many third-party services, from Amazon AWS to Google Cloud Platform, Microsoft Azure, and more.

- Requires only standard Python to code. Using the Python programming language, you can create simple and complex workflows with impressive scalability and flexibility options.

- Amazing user interface. Apache Airflow’s intuitive user interface is another excellent feature of this tool. The platform provides an easy-to-use interface for viewing your DAGs and managing your workflows—from ongoing to completed tasks.

If you’re wondering how Airflow works, the first step is understanding the tool’s modular architecture.

Apache Airflow Architecture

Here are the four components that interact to give you the robust workflow scheduling platform that is Apache Airflow:

Scheduler

The Apache Airflow scheduler is responsible for tracking all DAGs and their tasks. It starts a task once all of its dependencies are met, and it periodically checks active tasks to see whether they can be started and how they are progressing.

The scheduler also records vital workflow information, such as running tasks, operation statistics, and schedule intervals, in the metadata database. This makes it easier to track and control the flow.
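The dependency check described above can be modeled in a few lines of Python. This is a simplified sketch, not Airflow’s scheduler code: ready_tasks is a hypothetical helper, and the single "all upstream tasks succeeded" rule stands in for the scheduler’s much richer logic (trigger rules, pools, concurrency limits, and so on).

```python
def ready_tasks(upstream: dict[str, set[str]], states: dict[str, str]) -> list[str]:
    """Tasks that haven't started yet and whose upstream tasks all succeeded.

    A simplified model of the scheduler's dependency check (hypothetical helper).
    """
    return sorted(
        task
        for task, parents in upstream.items()
        if task not in states  # not scheduled or run yet
        and all(states.get(p) == "success" for p in parents)
    )


# The three-task chain used later in this article: task_1 -> task_2 -> task_3.
upstream = {"task_1": set(), "task_2": {"task_1"}, "task_3": {"task_2"}}

states: dict[str, str] = {}
order = []
# Keep "scheduling" whatever is ready; pretend each task succeeds immediately.
while batch := ready_tasks(upstream, states):
    for task in batch:
        states[task] = "success"
        order.append(task)

print(order)  # ['task_1', 'task_2', 'task_3']
```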

Web Server

This is where all the user interaction happens. The web server hosts Airflow’s user interface, which displays task status and lets users interact with the tool’s database. Users can also view task logs stored remotely, for example in Google Cloud Storage, Azure Blob Storage, or Amazon S3, through the web server.

Database

The metadata database stores the state of DAGs and their tasks, so the scheduler can always access up-to-date information and metadata about data processing.

Apache Airflow uses SQLAlchemy, an Object Relational Mapping (ORM) library, to connect the scheduler and other components to the metadata database.
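To picture how components share state through the metadata database, here is a toy version built on Python’s standard sqlite3 module. It is a heavy simplification: Airflow’s real task_instance table has more columns (such as run_id) and is accessed through SQLAlchemy, typically backed by PostgreSQL or MySQL. The create_store, set_state, and get_states helpers are hypothetical.

```python
import sqlite3


def create_store() -> sqlite3.Connection:
    # Toy, in-memory stand-in for the metadata database (not Airflow's schema).
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE task_instance ("
        " dag_id TEXT, task_id TEXT, state TEXT,"
        " PRIMARY KEY (dag_id, task_id))"
    )
    return conn


def set_state(conn: sqlite3.Connection, dag_id: str, task_id: str, state: str) -> None:
    # Scheduler/worker side: record the latest state of a task.
    with conn:  # commits the transaction on success
        conn.execute(
            "INSERT OR REPLACE INTO task_instance (dag_id, task_id, state) VALUES (?, ?, ?)",
            (dag_id, task_id, state),
        )


def get_states(conn: sqlite3.Connection, dag_id: str) -> dict[str, str]:
    # Web server side: read the current state of every task in a DAG.
    rows = conn.execute(
        "SELECT task_id, state FROM task_instance WHERE dag_id = ? ORDER BY task_id",
        (dag_id,),
    )
    return dict(rows.fetchall())


conn = create_store()
set_state(conn, "simple_dag", "task_1", "success")
set_state(conn, "simple_dag", "task_2", "running")
print(get_states(conn, "simple_dag"))  # {'task_1': 'success', 'task_2': 'running'}
```

Because every component reads and writes the same tables, the UI can show task status without talking to the scheduler directly.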

Executor

The executor is the component of Apache Airflow that determines how tasks actually get run. Airflow ships with several executors; four of the most common are:

- SequentialExecutor. A sequential executor runs only one task at a time, on the same machine as the scheduler. It is Airflow’s default executor and works with a SQLite database, so it is mainly suited to testing.

- LocalExecutor. Like SequentialExecutor, it runs tasks on a single machine, but it can run multiple tasks at the same time.

- CeleryExecutor. The CeleryExecutor distributes tasks across a pool of Celery workers, which can run on multiple machines. However, only the workers you have provisioned can pick up scheduled tasks, so capacity is limited by the size of that pool.

- KubernetesExecutor. This executor runs each task in its own Kubernetes pod. Because it creates worker pods on demand, it makes efficient use of cluster resources.
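The practical difference between running one task at a time and several at once can be illustrated with the standard library. In this sketch, run_sequential plays the role of SequentialExecutor and run_parallel the role of LocalExecutor; both are hypothetical helpers, and threads are used only to keep the example short (LocalExecutor actually runs tasks in separate processes).

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_task(name: str) -> str:
    # Stand-in for a task that mostly waits (I/O, an API call, a sleep...).
    time.sleep(0.1)
    return name


def run_sequential(tasks: list) -> list:
    # SequentialExecutor-style: one task at a time.
    return [fake_task(t) for t in tasks]


def run_parallel(tasks: list, parallelism: int = 4) -> list:
    # LocalExecutor-style: several tasks at once on one machine.
    # (The real LocalExecutor uses processes; threads keep this short.)
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        return list(pool.map(fake_task, tasks))


def timed(fn, *args):
    # Return the function's result together with its wall-clock duration.
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


tasks = ["task_1", "task_2", "task_3", "task_4"]
seq_result, seq_time = timed(run_sequential, tasks)
par_result, par_time = timed(run_parallel, tasks)
print(f"sequential: {seq_time:.2f}s, parallel: {par_time:.2f}s")
```

Both runs produce the same results; only the wall-clock time changes, which is the trade-off you weigh when choosing an executor.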

Here’s a brief introduction to Apache Airflow architecture operations:

- First, you build a DAG workflow containing the tasks, their order, and their dependencies. Airflow then parses the DAG and, based on its schedule, creates a DAG run in the metadata database. At this point, the tasks are scheduled.

- Next, the scheduler queries the database, retrieves the scheduled tasks, and sends them to the executor, changing their status to queued. The executor then pushes the tasks to the workers, and their status changes to running.

- Once a task finishes, it is marked as either successful or failed, and its final status is recorded in the metadata database.
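The status changes above form a small state machine. The sketch below encodes only the path and outcomes described here; Airflow’s real task states also include up_for_retry, skipped, upstream_failed, and others, and ToyTaskInstance is a hypothetical stand-in, not Airflow’s own model.

```python
# Allowed moves between the states described above (a simplified subset).
ALLOWED = {
    None: {"scheduled"},
    "scheduled": {"queued"},
    "queued": {"running"},
    "running": {"success", "failed"},
}


class ToyTaskInstance:
    """Hypothetical stand-in that tracks one task's state transitions."""

    def __init__(self, task_id: str):
        self.task_id = task_id
        self.state = None  # no state yet
        self.history = []

    def transition(self, new_state: str) -> None:
        # Reject any jump the lifecycle does not allow (e.g. None -> running).
        if new_state not in ALLOWED.get(self.state, set()):
            raise ValueError(f"{self.task_id}: cannot go from {self.state} to {new_state}")
        self.state = new_state
        self.history.append(new_state)


ti = ToyTaskInstance("task_3")
for state in ("scheduled", "queued", "running", "success"):
    ti.transition(state)
print(ti.history)  # ['scheduled', 'queued', 'running', 'success']
```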

Airflow Pros and Cons

No doubt, Apache Airflow provides its users with a long list of benefits. But, it does have some drawbacks, too. Let us explore the pros and cons of this tool:

Pros

- It has a large community, with over 1,000 contributors.

- It offers impressive scalability and functionality.

- It supports extensibility with several common external systems.

- It features dynamic pipeline generation.

- It combines seamlessly with various cloud environments.

- It is an open-source tool.

- It provides you with several options for monitoring data pipelines.

- You can programmatically author the workflows you want the platform to execute.

Cons

- Apache Airflow has a steep learning curve, since workflows must be written in Python.

- Sharing data from one task to the next can be very challenging.

- Monitoring data quality in Airflow pipelines is difficult, as it is not a core feature of the tool.

- Debugging can take too much time.

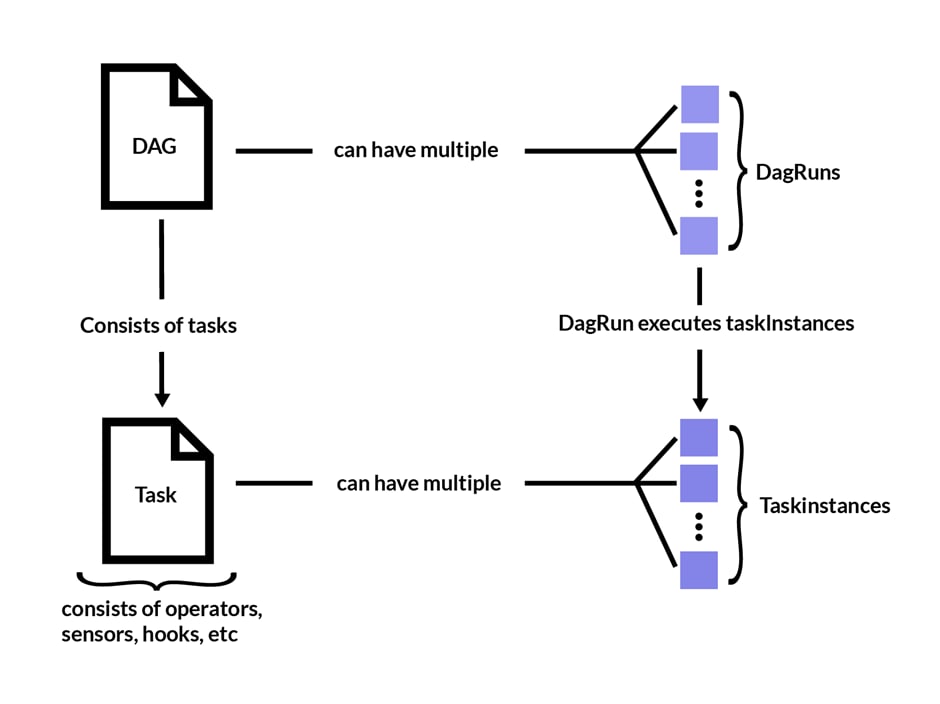

The Core Concepts of Apache Airflow

Here is an introduction to some core concepts you’ll encounter when using Apache Airflow.

DAG

Directed Acyclic Graph, or DAG, represents a data workflow or pipeline as a graph in which all dependencies point in one direction and no task depends on itself. DAGs in Airflow are defined in Python code.

- Directed. Every dependency has a direction: an upstream task must run before its downstream task.

- Acyclic. Dependencies cannot form a loop, so no task can depend, directly or indirectly, on itself. This guards against infinite loops.

- Graph. Each task has a logical structure in terms of clear relationships with other tasks.
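Python’s standard library can check both properties. In this sketch, graphlib.TopologicalSorter (Python 3.9+) takes a mapping of each task to its upstream tasks and produces a valid run order, raising CycleError if the dependencies loop back on themselves. Airflow performs its own cycle check when it loads a DAG; this is only an illustration of the idea, and execution_order is a hypothetical helper.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(upstream):
    """Return one valid run order for {task: upstream_tasks}; raise CycleError on a cycle."""
    return list(TopologicalSorter(upstream).static_order())


# Directed and acyclic: task_1 -> task_2 -> task_3
print(execution_order({"task_2": {"task_1"}, "task_3": {"task_2"}}))
# ['task_1', 'task_2', 'task_3']

# Not acyclic: task_a and task_b each wait for the other, so neither could ever run.
try:
    execution_order({"task_a": {"task_b"}, "task_b": {"task_a"}})
except CycleError as err:
    print("cycle detected:", err.args[1])
```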

Tasks

The basic concept behind tasks is that they are nodes in a DAG describing a unit of work. They are created by the user and can vary in complexity and duration.

Airflow Operators

These are the foundation of the Airflow environment and determine what tasks get done. Each operator is a reusable template for one kind of action, such as running a Bash command or calling a Python function, and you configure it through parameters.

Each operator you instantiate in a DAG becomes one task, and operators come in three types:

- Action operators execute a function.

- Transfer operators move data from its source to its destination.

- Sensor operators wait until something happens.
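Sensors work by checking a condition repeatedly until it becomes true or a timeout expires. The parameter names below mirror the poke_interval and timeout arguments of Airflow’s sensors, but wait_for is a hypothetical, simplified sketch of the polling loop, not Airflow’s sensor code.

```python
import time
from typing import Callable


def wait_for(condition: Callable[[], bool], poke_interval: float = 60.0, timeout: float = 3600.0) -> bool:
    """Check condition every poke_interval seconds until it returns True.

    Raises TimeoutError if timeout seconds pass first. A simplified sketch of a
    sensor's polling loop, not Airflow's implementation.
    """
    deadline = time.monotonic() + timeout
    while not condition():
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poke_interval)  # wait before checking again
    return True


# Demo: pretend an upstream file "appears" on the third check.
checks = {"n": 0}


def file_has_arrived() -> bool:
    checks["n"] += 1
    return checks["n"] >= 3


print(wait_for(file_has_arrived, poke_interval=0.01, timeout=5))  # True
```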

Hooks

Airflow is a platform that supports many third-party system interactions. This is where hooks come into the picture. Hooks let you connect to external databases and APIs such as S3, MySQL, Hive, GCS, etc.
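A hook’s main job is to look up connection details by ID so task code never hard-codes credentials. Airflow can read connections from environment variables named AIRFLOW_CONN_<CONN_ID> in URI form; the sketch below parses such a URI with urllib.parse. ConnInfo, get_connection, and ToyDbHook are simplified, hypothetical stand-ins, not Airflow’s classes, and the connection values are made up.

```python
import os
from dataclasses import dataclass
from typing import Optional
from urllib.parse import unquote, urlsplit


@dataclass
class ConnInfo:
    conn_type: str
    host: Optional[str]
    port: Optional[int]
    login: Optional[str]
    password: Optional[str]
    schema: str


def get_connection(conn_id: str) -> ConnInfo:
    # Look up AIRFLOW_CONN_<CONN_ID> and parse it as a URI (simplified).
    uri = os.environ[f"AIRFLOW_CONN_{conn_id.upper()}"]
    parts = urlsplit(uri)
    return ConnInfo(
        conn_type=parts.scheme,
        host=parts.hostname,
        port=parts.port,
        login=unquote(parts.username) if parts.username else None,
        password=unquote(parts.password) if parts.password else None,
        schema=parts.path.lstrip("/"),
    )


class ToyDbHook:
    """Hypothetical hook: resolves credentials by conn_id instead of hard-coding them."""

    def __init__(self, conn_id: str):
        self.conn = get_connection(conn_id)

    def describe(self) -> str:
        c = self.conn
        return f"{c.conn_type}://{c.host}:{c.port}/{c.schema} as {c.login}"


# In a real deployment this variable would come from your environment or secrets setup.
os.environ["AIRFLOW_CONN_SALES_DB"] = "mysql://etl_user:s3cret@db.example.com:3306/sales"
print(ToyDbHook("sales_db").describe())  # mysql://db.example.com:3306/sales as etl_user
```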

Relationships

Relationships are connections between tasks, and Airflow excels at defining them. For instance, take two tasks, T1 and T2. If you want T1 to run before T2, you must declare that in the DAG by defining their relationship, which the scheduler then respects.

This typically involves statements like:

- T2 << T1

- T1 >> T2

- T1.set_downstream(T2)

- T2.set_upstream(T1)
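The >> and << syntax works through Python operator overloading. The toy Task class below shows one way to build it: each operator records the dependency and returns the right-hand task so calls can be chained. Airflow’s operators support the same syntax, but this class is a simplified illustration, not Airflow’s implementation.

```python
class Task:
    """Toy task showing how >> and << can be built on operator overloading."""

    def __init__(self, task_id: str):
        self.task_id = task_id
        self.upstream = set()    # ids of tasks that must run before this one
        self.downstream = set()  # ids of tasks that run after this one

    def set_downstream(self, other: "Task") -> None:
        self.downstream.add(other.task_id)
        other.upstream.add(self.task_id)

    def set_upstream(self, other: "Task") -> None:
        other.set_downstream(self)

    def __rshift__(self, other: "Task") -> "Task":
        # a >> b means a runs before b; returning b allows a >> b >> c.
        self.set_downstream(other)
        return other

    def __lshift__(self, other: "Task") -> "Task":
        # a << b means b runs before a.
        self.set_upstream(other)
        return other


t1, t2, t3 = Task("t1"), Task("t2"), Task("t3")
t1 >> t2 >> t3
print(t2.upstream, t2.downstream)  # {'t1'} {'t3'}
```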

A Quick Example of a Typical Airflow Code

The following is one of the simplest examples of Airflow coding.

Basic Requirements

Before we walk you through coding in Apache Airflow, here is a list of what you need to have:

- Docker (with Docker Compose)

- Linux/macOS, or Windows with WSL

Setup

- Create a new directory for your Airflow project (e.g., “airflow”).

- Next, create a docker-compose.yml file in that directory and paste in the contents of the official file:

https://airflow.apache.org/docs/apache-airflow/2.3.0/docker-compose.yaml

- Then create a .env file with the text below, filling in AIRFLOW_UID with your user ID (run id -u to find it):

```
AIRFLOW_IMAGE_NAME=apache/airflow:2.3.0
AIRFLOW_UID=
```
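If you’d rather not look up your user ID by hand, a small Python script can generate the .env file. This is a sketch that assumes you run it on Linux, macOS, or WSL (os.getuid is not available on native Windows); write_env_file is a hypothetical helper, and a shell one-liner such as echo -e "AIRFLOW_UID=$(id -u)" > .env achieves much the same.

```python
import os
from pathlib import Path


def write_env_file(project_dir: str = ".", image: str = "apache/airflow:2.3.0") -> Path:
    """Write <project_dir>/.env with the image name and the current user's UID."""
    env_path = Path(project_dir) / ".env"
    env_path.write_text(
        f"AIRFLOW_IMAGE_NAME={image}\n"
        f"AIRFLOW_UID={os.getuid()}\n"  # os.getuid() exists on Unix-like systems only
    )
    return env_path
```

Calling write_env_file() from the project directory produces the same two lines as the manual .env file, with your UID filled in.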

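- Finally, start Airflow. The first time, initialize the metadata database and create the default user account:

```
docker-compose up airflow-init
```

Then start all the services:

```
docker-compose up
```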

And there you have it!

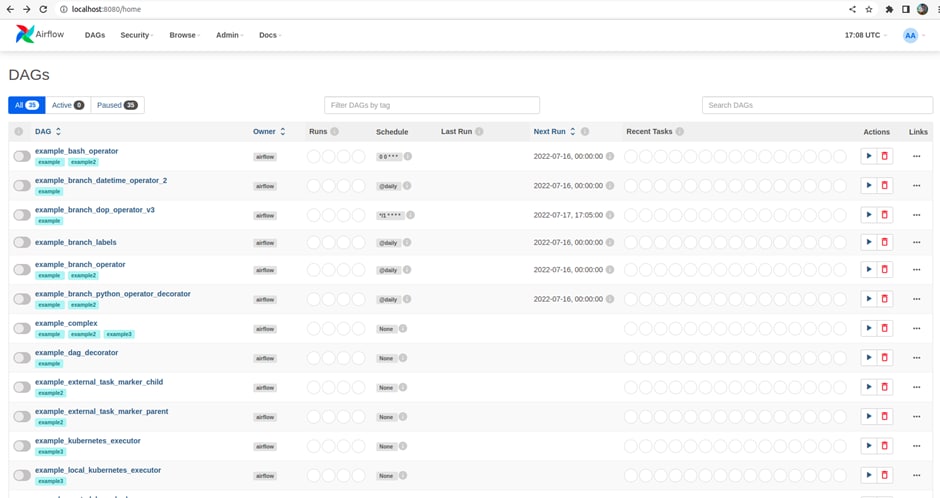

Go to http://localhost:8080/ and log in using default credentials:

Username: airflow

Password: airflow

Creating Your First DAG: A Step-by-Step Guide

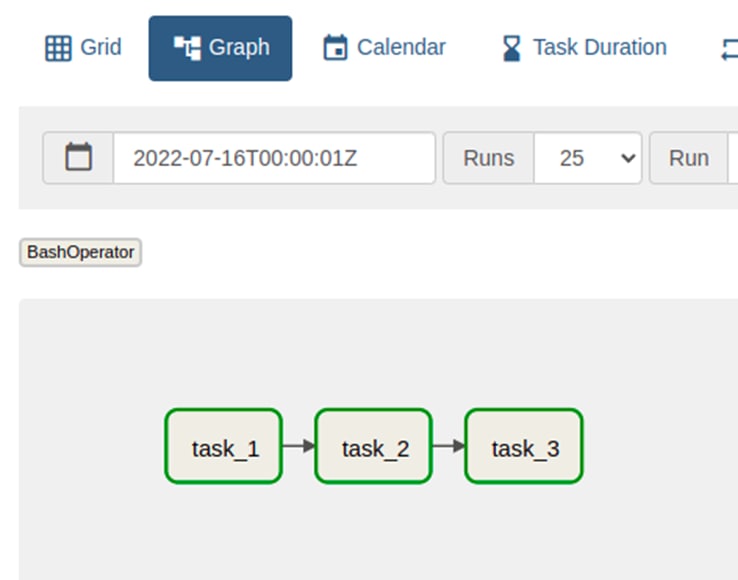

We’ll use a simple DAG in this tutorial, and the tasks will be to:

- Print the “Hello world!” message

- Wait 10 seconds

- Print the current date and time

So, let’s dive right in!

First, create a Python script named simple_dag.py inside the dags folder of your project directory (the official docker-compose.yml mounts ./dags into the Airflow containers). In this script, we must first import some modules:

```python
from datetime import datetime

from airflow.models import DAG
from airflow.operators.bash import BashOperator
```

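Next, define the default arguments that every task in the DAG will inherit:

```python
# Arguments applied to every task in the DAG unless a task overrides them
default_args = {
    'start_date': datetime(2022, 1, 1),
}
```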

Now it’s time to tell your DAG what it should do. First, declare the three tasks (task_1, task_2, and task_3) inside the DAG context.

Then define which task depends on which.

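```python
# Run once a day; catchup=False skips backfilling runs between start_date and now
with DAG('simple_dag', schedule_interval='@daily',
         default_args=default_args, catchup=False) as dag:

    # Print a greeting
    task_1 = BashOperator(
        task_id='task_1',
        bash_command='echo "Hello world!"'
    )

    # Wait 10 seconds
    task_2 = BashOperator(
        task_id='task_2',
        bash_command='sleep 10'
    )

    # Print the current date and time
    task_3 = BashOperator(
        task_id='task_3',
        bash_command='date'
    )

    # task_1 runs first, then task_2, then task_3
    task_1 >> task_2 >> task_3
```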

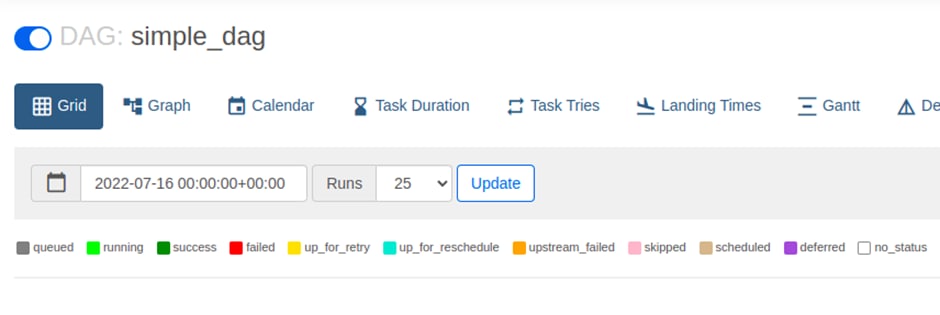

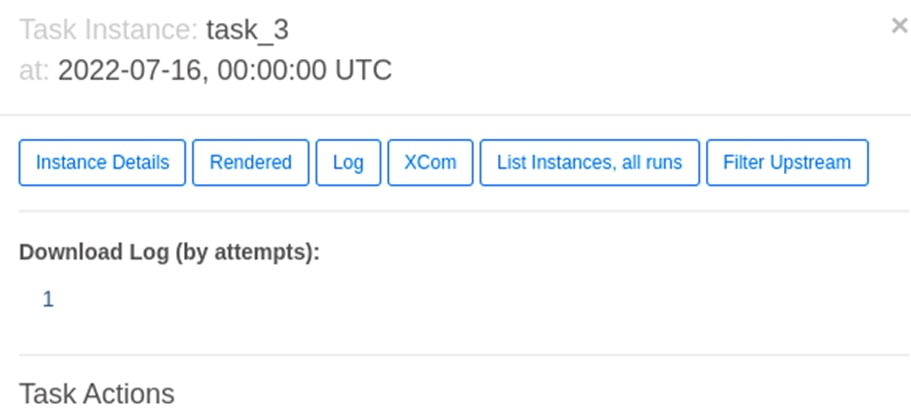

Testing the DAG

Now, let’s walk you through how to see what the DAG looks like and, more importantly, see if it works.

- First, go to http://localhost:8080/

- Next, find simple_dag in the list of DAGs and unpause it with the toggle next to its name.

- To check that your DAG works, go to the Graph tab and confirm that all tasks are done (they should have a dark green border, which indicates success).

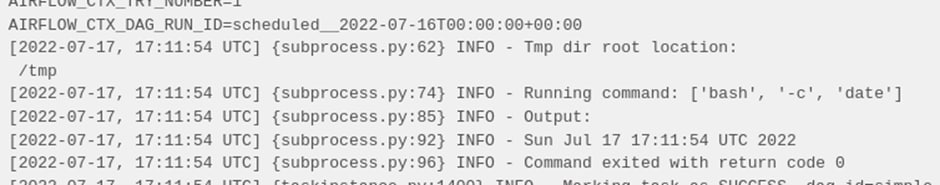

- Click on task_3 and go to Log.

Here, you will see that the current date and time were printed (the INFO line after Output line):

You just created and tested your first DAG. Congratulations!

Cloud Solutions (MWAA vs. Google Cloud Composer vs. Astronomer)

We’ll compare these cloud solutions using three criteria: ease of setup, customizability, and executor limitations.

Ease of Setup

Of the lot, Astronomer is the most challenging to set up. On the other hand, Amazon Managed Workflows for Apache Airflow (MWAA) and Google Cloud Composer require only a few clicks, and you can set up and start running Airflow in less than 30 minutes.

Customizability

This is where Astronomer shines. It is much easier to edit your Python libraries in Astronomer than in either Cloud Composer or MWAA.

So, it’s about deciding whether you want more flexibility or faster DAG deployment.

Executor Limitations

Executors play a critical role in determining how the tasks in your Airflow instance are managed. Therefore, you must consider your executor when choosing a cloud solution, as the wrong one may affect your work speed and scalability.

Astronomer supports the Local, Celery, and Kubernetes executors, while MWAA and Google Cloud Composer offer only the Celery executor.

Making a Choice

If you only need to build simple DAGs, MWAA or Google Cloud Composer should be enough to get the job done. However, for more complex projects and tighter control requirements, Astronomer is hard to beat!

The Best Practices for Using Airflow

Here are some salient points to consider when using Apache Airflow:

Managing Configuration

- Take advantage of built-in systems, such as connections and variables.

- You can also use non-Python tools in Airflow.

- Make sure to target a single source of configuration.

Building and Splitting DAGs

- Consider having one DAG per data source, per project, or per data sink.

- Keep code such as SQL queries in template files rather than inline in the DAG.

- Use Hive template files for Hive queries.

- If Airflow needs to search for your templates, set the template search path (the DAG’s template_searchpath parameter).

Generating Extensions and Plugins

- Build your plugins and extensions on top of existing classes, and then adapt them.

- Operators, hooks, executors, macros, and UI adaptations (views, links) are the relevant extension points.

Generating and Expanding Workflows

- There are three environment levels to consider: personal, integration, and production.

- At the personal level, use the airflow tasks test command for your testing.

- At the integration level, run integration and performance tests.

- At the production level, focus on monitoring.

Integrating with the Enterprise

- Consider using Airflow’s workflow tools for scheduling.

- Leverage Apache Airflow’s built-in integration tools.

Need IT Consulting Help?

Here’s why you should consider Geniusee:

- We help you save money and time.

- We take some of the workload off you so you can focus on your business values.

- We help you expand your area of expertise.

- We help you boost your ROI.

- We improve your business processes and system to ensure higher productivity.

Wrapping It All Up

Apache Airflow fills a gap in the big data ecosystem by providing a more straightforward way to define, schedule, visualize, and monitor the underlying jobs needed to operate a complex data pipeline.

Hopefully, this article has given you a detailed Apache Airflow overview and insight into why it is so popular among business owners today.