It’s well-known that an artificial intelligence (AI) model’s performance depends entirely on the training data. Poor data quality (e.g., blurred or wrongly identified training images of self-driving cars) can lead to misidentifying stop signs, and pedestrians may cause grave accidents. High-quality labeled data is crucial, but self-supervised learning is the common technique used to learn with unlabeled data in modern AI models.

However, some data must be precisely labeled, which is crucial for compliance-driven industries that require transparent and unbiased AI. Companies in highly regulated sectors depend on accurately marked data to meet ethical standards and minimize bias in AI-driven decisions. Still, The Economic Times predicts the market value will exceed $8.22 billion by 2028 due to increased demand for high-quality training materials.

To stay competitive, businesses must optimize data-labeling workflows and avoid costly inefficiencies. This article demonstrates step-by-step instructions about data pipeline development, the value of data labeling tools, best practices, and recognizing essential mistakes that should be avoided. Let’s get started!

What is AI data annotation?

Machine learning and AI systems require data labeling to convert raw information into labels, which they understand through this process. During data preparation, AI systems make raw data useless because wrong annotations leave the main data unstructured.

AI’s capability depends entirely upon the quality of data it receives for learning purposes. The accuracy of annotation leads to the proper interpretation and how AI understands the provided information.

The rising need for AI-powered technologies has led many companies to spend resources on data annotation approaches to improve their model accuracy outcomes. To achieve dependable AI performance, you must start with accurate data marking. It serves as the core foundation of reliable AI performance no matter what area of AI and ML models you work in.

These models require data labeling as an essential step because it delivers annotated data examples, which enables them to identify patterns, automate tasks, and produce predictions.

Thank you for Subscription!

Types of data annotation

The choice of annotation techniques depends on the kind of provided data and the specific use of a predictive model. AI annotation takes several primary forms that appear most frequently in practice.

1. Image annotation

When performing image annotation, one annotates objects while marking boundaries and features for AI systems to identify visual patterns inside images. The annotation technique exists in many applications, including autonomous vehicles, facial recognition systems, and medical imaging. The three primary methods of image marking consist of these:

The detection process requires technicians to label data or objects with bounding boxes representing rectangular shapes.

The semantic annotation strategy assigns each pixel to a specific class for detailed scene understanding.

The keypoint annotation method marks important points on objects, such as facial landmarks.

2. Video annotation

Video annotation serves the same purpose as image annotation, yet it operates on constantly changing video frames. Tracking objects over time requires AI to receive labeled video data, typically for data security of surveillance applications, sports analysis, and autonomous systems. Here are two video annotation methods:

The marking annotations on every frame that composes a video constitute frame-by-frame labeling.

The identification and tracking of moving objects between frames make up this technique.

3. Text annotation

AI’s ability to grasp language depends on text annotation, which enables search engines, sentiment analysis systems, and chatbots to function correctly. Some key techniques include:

The process, known as named entity recognition (NER), identifies names together with dates and locations.

Transcript annotation systems allow developers to identify and group users’ different purposes when using chatbots.

Text annotation requires sentiment analysis, which labels text according to its positive, negative, or neutral sentiments.

4. Audio annotation

AI systems that process sounds through information provided by audio labeling differentiate speakers from emotions and background noise signals. Voice assistants, call center analytics, and speech-to-text apps need this tool for proper functionality. Standard annotation involves:

Speaker identification by labeling different voices in an audio file

Spoken word conversion into written text functions as one of the speech-to-text transcription methods

The system identifies acoustic occurrences like alarms, footsteps, and traffic noises through acoustic event detection

5. Time series annotation

AI obtains financial and sensor data with time series annotation before identifying underlying patterns in these types of information. The system enables developers to find recurring developments and unanticipated patterns over time. Organizations employ this annotation method extensively to detect fraud, conduct predictive maintenance, and assess stock markets.

Benefits of AI data annotation

AI requires effective data annotation investments for proper training implementation. Combining high-quality annotations drives more acceptable accuracy levels and strengthens model capabilities, ensuring dependable AI predictions. Here’s how AI data annotation adds value:

1. More accurate AI predictions

Think of AI-powered medical diagnostics. Annotation mistakes can cause a tumor identification failure that results in an incorrect medical diagnosis by the model. Proper medical imaging annotation produces accurate AI performances of X-rays, MRIs, and other medical scans, thus achieving positive patient healthcare results.

Proper audio labeling improves virtual assistant performance by enabling more effective recognition of various accent types and speech patterns.

2. Faster and more efficient AI training

Using unstructured data to train an AI is similar to providing instructional materials to students without textbooks during lessons. A fraud detection AI system misidentifies numerous genuine transactions because it does not receive sufficient data that contains precise labels.

This causes performance losses and unhappy customers. Efficient AI learning reduces training time because precise data labeling creates better system operation results.

3. Scalability for AI-powered solutions

AI apps are getting larger, so we need more data labeling capabilities. Therefore, self-driving car organizations accumulate vast amounts of data every day. The lack of a reliable data annotation scalability mechanism would create difficulties for these companies. Businesses deploying AI annotation systems, process large-scale data, maintaining accurate, up-to-the-minute AI functionality.

4. Improved user experience in AI applications

Have you ever experienced a recommendation system that grasps the preferences you like? High-quality training data is the basis for AI-driven experiences, such as movie recommendations on Netflix and predictions through e-commerce platforms. Sentiment and intent annotation enable AI to have context and feelings, personalize them, and make the interaction more engaging.

5. Ethical AI development and bias reduction

AI bias is a serious issue. An AI recruitment software uses imperfect training data to discriminate between different groups of candidates during the hiring process. The lack of dataset diversity and faults during annotation procedures lead to such situations.

Almost 6 years ago, Amazon discontinued its AI recruitment tool, which showed a preference for male candidates during the candidate selection process. The system was trained on employee resumes that Amazon has collected over 10 years, predominantly from male candidates, reflecting the tech field’s historical bias toward men. The AI system penalized resumes incorporating the term “women’s” while favoring apps referencing masculine professional experiences.

The system displayed biased behavior toward male candidates; it acquired knowledge from historical data that wasn’t objective. So, proper data annotation plays unbiased AI models because it confirms that datasets reflect a wide range of perspectives while abiding by ethical standards for AI.

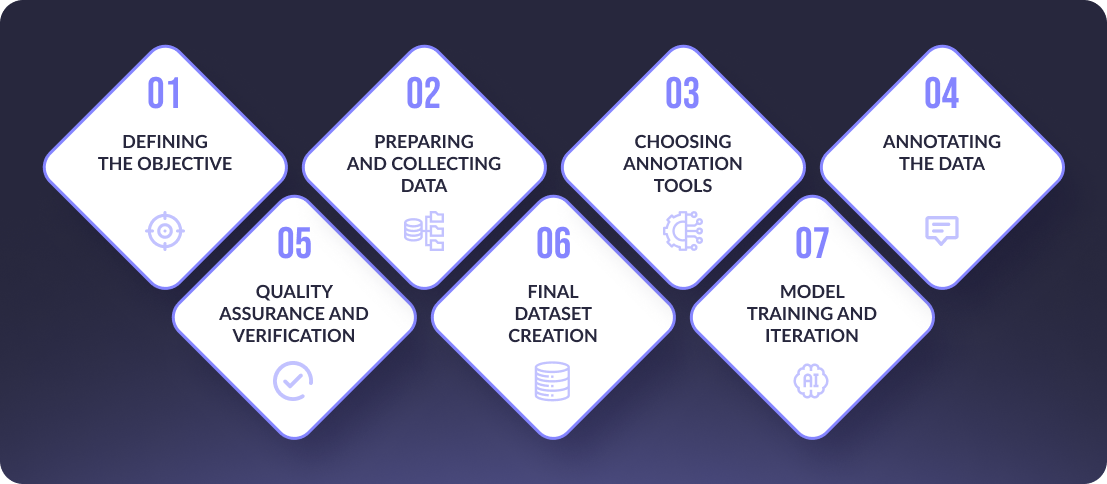

The process of AI data annotation

Embark on the AI process of labeling data with the understanding that it is not a one-size-fits-all approach. You will know that this approach consists of some tailored stages so that the final dataset is meticulously labeled, reliable, and ready to train the machine learning models. So, how to do data annotation? We will break the annotation guidelines step by step to explain what this all looks like:

1. Defining the objective

To annotate data, you must know what predictive model you will train. You are doing research on an image annotation task for an autonomous vehicle. Do you want to label the text data for sentiment analysis or use a predefined set of labels? A clear objective allows your data to align with your AI'’s precise goals, reducing the time and effort required for a proper process.

The way you are working with the data will determine the annotation strategy:

Determine your end goal: Describe what your predictive model should understand.

Annotate them: Determine how all annotation types will be done in your data.

Define an annotation plan: Establish how to annotate consistently from beginning to end to maintain a consistent goal throughout the process.

2. Preparing and collecting data

After deciding on the objective, data collection becomes the second step. Your AI will work properly during its live deployment only when the gathered data accurately mirrors real-life situations. Still, the AI data annotation project will need different kinds of data collected from several distinct information sources.

Images or videos are the foundation for annotation activities requiring identifying objects or activities.

Language texts work optimally for sentiment evaluation alongside parts of speech classification.

Audio is an annotation method used when transcribing verbal speech for virtual assistants and voice-recognition systems.

Sensor data represents an example of IoT devices and self-driving cars requiring sensor data annotation.

3. Choosing annotation tools

The following procedure selects appropriate data annotation tools for adequate data labeling from gathered information. Your selected labeling tools must be scalable, intuitive, and robust enough to process the large volume of data that AI needs. Whatever type of data you need to annotate determines the selection of proper markup tools compatible with your AI project’s scope.

AI-powered annotation tools available for widespread use include:

LabelImg for image annotation tasks.

Amazon Mechanical Turk is a data crowdsourcing platform that can also automatically collect AI data pipelines. However, it’s important to adhere to the data annotation platform rules and perform quality control, verifying workers’ qualifications and automated tools. The point is to use proper process management to save time without sacrificing data accuracy.

The Prodigy text annotation platform will help you generate training data and develop its capabilities.

CVAT (Computer Vision Annotation Tool) for large-scale video annotation projects.

4. Annotating the Data

In this phase, data annotators (human-based or machine-based) iteratively start the labeling application process. Annotation is the task of assigning data corresponding labels to help AI recognize and forecast according to patterns. This includes annotating the corresponding data for the project goals.

Here are some examples of annotation processes:

Image annotation: Object marking (e.g., vehicle detection, pedestrian detection, traffic sign detection) in images for driving a system in vehicles.

Text annotation: POS tagging of the words, named entity recognition (NER) (e.g., places, entities, or other classifications), sentiment (positive, neutral, negative) (or other classifications) of the text in natural language processing (NLP) data processing models.

Audio annotation: Transcribing speech to text or tagging emotions in a person’s voice for voice recognition or chatbot systems.

Video annotation: Extracting discriminating actions, e.g., person detection, tracking objects, or recognizing events, from a video stream.

5. Quality assurance and verification

Following data annotation, verifying its correctness is the next most important step. It is crucial because system learning models depend on this categorized information for education. If the information is flawed, the version’s predictions will be wrong. Ensuring the labels’ correctness is important to guarantee that the annotated data meets the stipulated standards.

This can be done in several ways:

Manual review: A second pair of eyes can perform manual annotation to match whether it is correct and consistent.

Automated quality checks: Annotation tools help automate the review process by cross-checking the label or implementing algorithms that can find errors.

Inter-annotator agreement (IAA): If more than one annotator is assigned a dataset, their agreement can be quantified to determine whether the annotations are convergent.

More from our blog

Legal Advisor: AI-powered chatbot for lawyers

Streamline processes and enhance efficiency by up to 60% in your legal organization with our AI chatbot for lawyers.

Read more6. Final dataset creation

After verification and correction, the final dataset is built. This dataset can be used to train your AI and machine learning algorithms. Right now, it is important to organize the data in a way that is consistent with the model’s input format.

Dataset format: Ensure that annotated data is in an appropriate format (e.g., JSON, XML, CSV) for training.

Data segmentation: Depending on the use case, you might segment the data into train, validation, and testing sets.

Data augmentation: In some instances, it may be necessary to construct more data by augmenting the current data set, such as rotating, flipping, or scaling images.

7. Model training and iteration

The last step is to input the marked data into the AI. After training, the model will begin making forecasts. The process of training AI models is iterative. The better the quality of the annotations you supply, the better the AI system’s performance will be; nevertheless, this is not the final step in the process because you usually need fine-tuning.

This may involve:

Retraining: When the model is not working (i.e., the annotated data is incorrect), it may be necessary to review the annotated data, rescale or adjust the label, or acquire more annotation to complete the training data.

Continuous learning: In specific contexts, AI can continually learn from new data annotations as they are added to the training set.

Conclusion

AI data annotation is the backbone of training AI, as the quality of your annotation directly affects the resultant model's performance. Whether an image, text, or video annotation, high-quality data is essential to help an AI system recognize patterns and provide reliable decision-making. Defining a consistent and well-structured data annotation workflow creates the base for solid AI production and machine learning achievement.

Understanding your data type is your priority when creating an effective annotation pipeline. Choose the right tools and techniques for each specific task. With the appropriate infrastructure, training, and continuous validation checks, the AI can correct and consistently train data, sharpening its performance.

If you’re ready to scale AI data labeling and need expert guidance, Geniusee offers comprehensive services tailored to your needs. We can assist you in understanding the intricacies of data annotation so that your AI produces optimal results in multiple industries. Contact us, and let’s build the future of AI and machine learning together