We’re way past the stage where anyone needs convincing that data matters. Everyone tracks something. Everyone has dashboards. Everyone says they’re data-driven.

But despite all those efforts, the same issues keep arising:

A student stops engaging — and you notice only two weeks later.

A feature or a whole course underperforms, and you find out at the end of the quarter.

Something stops working, but it becomes clear only once your learners have already dropped off.

And that’s the real problem today. Not the lack of data, but the lack of timely, actionable insight — ideally, even before the actual issue comes up.

Predictive analytics is what can fix that.

Adoption is growing fast: the market for predictive analytics in education is expected to reach $5,892 million by 2034, up from $680.1 million recently. And that’s for a good reason.

What exactly is predictive analytics? How can it improve performance in EdTech? And what’s the right way to adopt the approach?

Based on years of experience in education software development, as well as AI-powered app development, we'll explain it all step by step.

What is predictive analytics?

It's not “advanced reporting,” not retrospective analysis, and not just another layer of dashboards. It's about proactively anticipating what’s likely to happen — and acting on it while there’s still time to make a difference.

For example:

| Use case | Example |
| --- | --- |
| Recommending personalized learning paths based on behavior | LinkedIn Learning suggests follow-up courses based on skill data, user behavior, and patterns from similar learners. |
| Adapting content in real time to match user performance | Duolingo adjusts lessons and offers targeted pronunciation drills when it sees you struggle with certain sounds. |
| Spotting patterns that lead to high performance | ClassDojo analyzes behavior logs to surface which interactions correlate with better academic and social outcomes. |
| Preventing enrollment in wrong or unnecessary courses | Georgia State prevented 2,000+ students from enrolling in the wrong course based on predictive signals. |
| Spotting high-performing content and scaling it | Coursera uses learner data (completion rates, reviews) to highlight and prioritize successful courses. |
| Alerting instructors when students stop engaging | Lone Star College uses Intelligent Agents to notify instructors automatically when students miss logins or deadlines. |
| Personalized tutoring and pacing through AI | Khan Academy’s Khanmigo AI assistant adjusts content and interaction style to fit individual student needs. |
| Forecasting enrollment trends and adapting offerings | Marshall University uses predictive analytics to forecast student enrollment and inform institutional planning. |
| Optimizing scheduling and faculty assignments | Arizona State University applies predictive models to allocate faculty and adjust course offerings. |
| Supporting learners with specific challenges, like dyslexia | Amira Learning provides real-time speech diagnostics and custom learning flows for dyslexic students. |

If that sounds impressive, that’s because it is. These organizations made the right decisions at the right moment and continue to act on what their data tells them.

What are the exact benefits and limitations of predictive analytics in education?

Now that we’ve gone through some of the brightest examples, let’s get specific about the benefits you can expect from implementing predictive analytics, and about where its power ends.

The benefits of integrating predictive analytics into your EdTech product are twofold:

| Student & teacher | Organizational |
| --- | --- |
| Higher grades and completion rates | Lower churn and drop-off rates |
| Faster identification of learning gaps | Clear ROI from learning outcomes |
| Tailored content suggestions | Smarter product iteration |
| Timely nudges and support | Higher customer satisfaction and loyalty |
| Reduced instructor burden | More effective upsell and content strategy |
| Better communication with students | Optimized resource allocation |
| Fewer unnecessary interventions | Better forecasting and capacity planning |
| A sense of being seen for learners | Stronger brand reputation |

Predictive analytics can be your real leverage — the shift that moves your entire process from reactive to proactive. While others are still wasting time trying to figure out why something went wrong, you’ll be improving things before the issue even shows up.

But let’s be clear — there are still limitations. For example:

No algorithm can fix bad content. Predictive analytics can tell you what’s not working — but it can’t magically make your product better. If the core learning experience is weak, the data will just confirm that.

You still need enough clean data. If you don’t have consistent user activity, engagement signals, or outcomes to work with, predictions won’t be meaningful.

It doesn’t replace human decisions. Predictive analytics can surface patterns. It can recommend actions. But someone still has to decide what to actually do about them — and when not to follow the algorithm.

Context matters. A lot. The same behavior may mean different things in different environments. A missed quiz isn’t always a disengaged student — sometimes it’s just travel or illness. Prediction doesn't equal judgment.

It works best as part of a system — not a patch. You get the real value when predictive analytics is built into your product logic, content delivery, and support workflows — not tacked on as an afterthought.

Taking all of that into account, predictive analytics isn’t a silver bullet, but it is a serious multiplier. It won’t solve your core problems for you, but it will show you where they are, how they spread, and when to act. And it can become a powerful tool for building an education product that anticipates problems, adapts in real time, and gets better with every learner.

How does predictive data analytics work?

Imagine this:

A student used to log in every weekday and score ~80% on quizzes.

For the last 4 weeks, they’ve logged in just once a week.

An algorithm trained on thousands of similar patterns recognizes this drop in engagement as a common precursor to course failure.

It flags this learner as at-risk.

An alert is sent to the instructor, and a chatbot proactively engages the student.

The platform adjusts content difficulty or pace.

The student re-engages and avoids failure.

This is just one sample scenario; there can be dozens, hundreds, or thousands of them, depending on your project’s needs and scale.
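To make the scenario concrete, here is a minimal Python sketch of the flagging step. It deliberately swaps the trained model for a hand-written rule: a learner is flagged when their recent login frequency drops to half of their own baseline, or when their quiz average slips by 10 or more points. The `LearnerWeek` structure, the `engagement_drop_flag` function, and all thresholds are hypothetical, chosen only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LearnerWeek:
    logins: int            # days the learner logged in that week (hypothetical field)
    avg_quiz_score: float  # average quiz score that week, 0-100 (hypothetical field)


def _mean(values: list[float]) -> float:
    return sum(values) / len(values)


def engagement_drop_flag(history: list[LearnerWeek], recent_weeks: int = 4,
                         login_ratio: float = 0.5, score_drop: float = 10.0) -> bool:
    """Return True when the last `recent_weeks` fall well below the learner's own baseline.

    The thresholds are illustrative assumptions, not tuned values.
    """
    if len(history) <= recent_weeks:
        return False  # not enough history to establish a personal baseline
    baseline, recent = history[:-recent_weeks], history[-recent_weeks:]
    # Logins collapsed: recent average is at most `login_ratio` of the baseline average.
    logins_collapsed = (_mean([w.logins for w in recent])
                        <= login_ratio * _mean([w.logins for w in baseline]))
    # Scores slipped: recent average is `score_drop` points or more below the baseline.
    scores_slipped = (_mean([w.avg_quiz_score for w in baseline])
                      - _mean([w.avg_quiz_score for w in recent])) >= score_drop
    return logins_collapsed or scores_slipped


# The scenario: 8 weeks of weekday logins at ~80%, then 4 weeks of one login a week.
history = [LearnerWeek(5, 80.0)] * 8 + [LearnerWeek(1, 78.0)] * 4
print(engagement_drop_flag(history))  # True
```

Comparing each learner with their own baseline, rather than with a global average, keeps the rule from flagging learners who have always logged in less often. A rule like this can serve as a first version before a model trained on historical outcomes replaces it.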

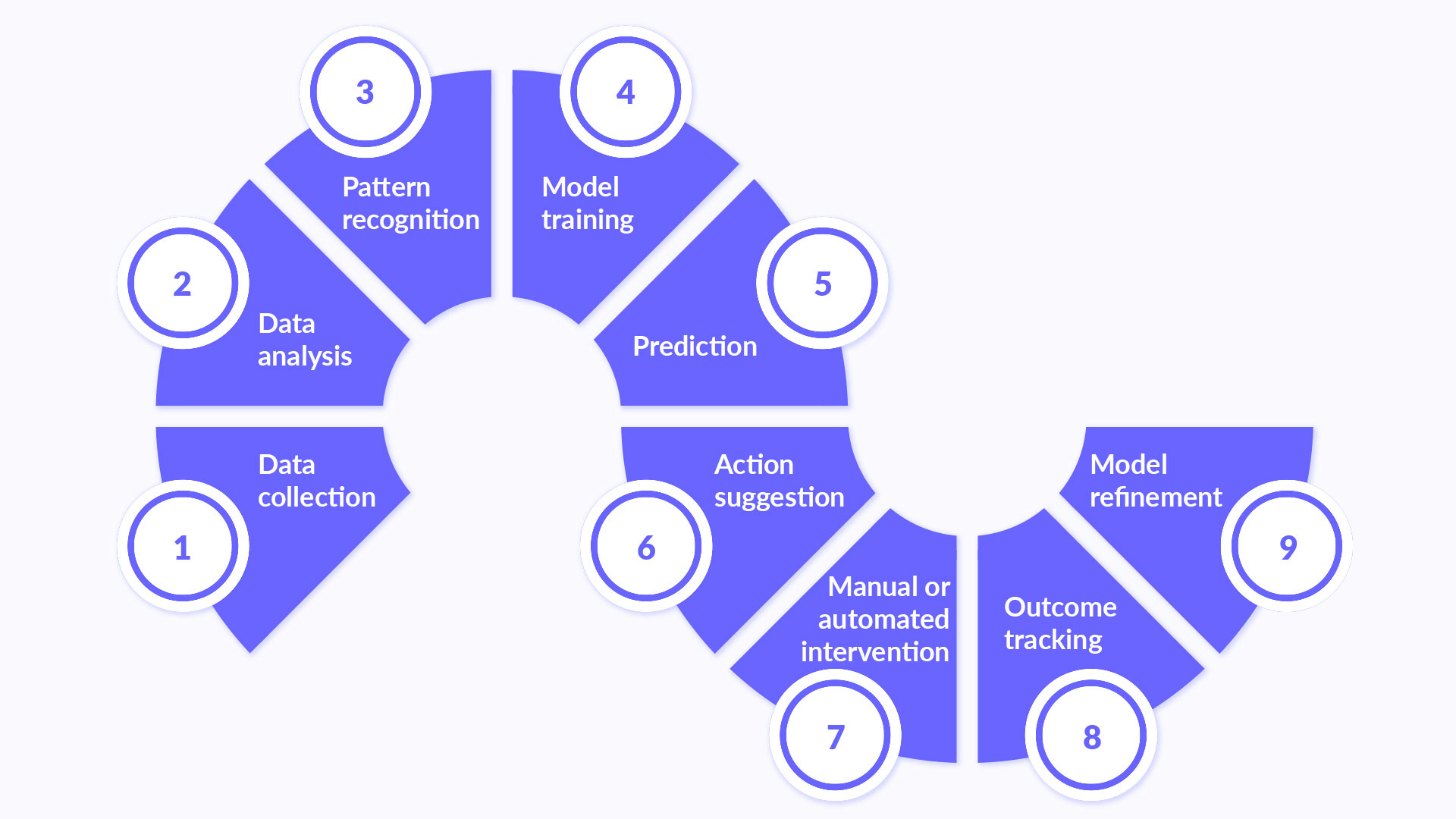

Here's how the whole thing works:

1. Data collection. The system gathers both historical and real-time data: quiz results, logins, clicks, time on task, skipped content, and so on.

2. Data preparation. The raw input is cleaned, sorted, and structured so it can actually be used.

3. Pattern recognition. It identifies behavioral trends across large datasets, often using AI features to spot early signals that typically precede success or failure.

4. Model training. The system learns from those patterns using statistical methods and machine learning — basically figuring out what usually leads to what.

5. Prediction. When new data comes in, the system applies what it has learned to detect what’s likely to happen: e.g., a drop in engagement, a risk of churn, a missed learning objective.

6. Action suggestion. The system recommends what to do — it can be an alert, a nudge, a change in pacing, a content adjustment, or anything else — while there’s still time to impact the outcome.

7. Manual or automated intervention. Someone acts on it: a teacher, a product manager, or the system itself.

8. Outcome tracking. It checks what happened next. Did the learner bounce back? Did the problem get worse? That feedback matters.

9. Model refinement. The system learns from that feedback and adjusts. Over time, it gets better at knowing what works, and when.
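Steps 4 to 6 can be sketched in a few dozen lines of standard-library Python. This is a minimal illustration, assuming plain logistic regression trained with batch gradient descent as the model (one common choice for binary at-risk prediction, not necessarily what any given platform uses) and two hypothetical features: weekly login days and average quiz score, both scaled to 0-1. The toy records, the function names, and the action thresholds are all made up for illustration.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_risk(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Step 5: estimated probability that a learner with features x fails or drops out."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_logistic(X: Sequence[Sequence[float]], y: Sequence[int],
                   lr: float = 0.5, epochs: int = 3000) -> Tuple[List[float], float]:
    """Step 4: fit weights and bias with batch gradient descent on log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict_risk(w, b, xi) - yi  # predicted probability minus the label
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def suggest_action(risk: float) -> str:
    """Step 6: map a risk score to an intervention (hypothetical thresholds)."""
    if risk >= 0.7:
        return "alert instructor"
    if risk >= 0.4:
        return "send nudge"
    return "no action"


# Hypothetical toy history: [login days per week / 7, average quiz score / 100];
# label 1 = the learner later failed or dropped the course.
X = [[5/7, 0.85], [4/7, 0.80], [5/7, 0.75], [6/7, 0.90],
     [1/7, 0.55], [1/7, 0.60], [0/7, 0.40], [2/7, 0.50]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train_logistic(X, y)
for features in ([5/7, 0.82], [1/7, 0.50]):
    risk = predict_risk(w, b, features)
    print(f"risk={risk:.2f} -> {suggest_action(risk)}")
```

Steps 8 and 9 would close the loop: once outcomes are known, the newly labeled records are appended to `X` and `y`, the model is retrained, and the thresholds in `suggest_action` are adjusted. In production, a library such as scikit-learn would normally replace the hand-rolled training loop.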

And the best part?

Predictive analytics isn’t only about spotting problems. It’s just as useful for finding what’s working well and scaling it.

The same system that detects early signs of disengagement can also highlight the patterns behind success: the feature that improves outcomes, the content format that keeps users engaged, or another small thing that makes a big difference.

And once you know what’s working, you can do more of it.
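As a simple illustration of the success side, the sketch below ranks content formats by completion rate. It assumes hypothetical enrollment records with a `format` field and a boolean `completed` flag; `completion_rate_by` and `min_count` are illustrative names, and the minimum group size keeps tiny samples from topping the ranking.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Mapping, Tuple


def completion_rate_by(records: Iterable[Mapping], key: str,
                       min_count: int = 1) -> List[Tuple[str, float]]:
    """Completion rate per value of `key`, best first; skips groups smaller than min_count."""
    totals: Dict[str, int] = defaultdict(int)
    completed: Dict[str, int] = defaultdict(int)
    for r in records:
        totals[r[key]] += 1
        completed[r[key]] += int(r["completed"])
    rates = [(k, completed[k] / totals[k]) for k in totals if totals[k] >= min_count]
    return sorted(rates, key=lambda kv: kv[1], reverse=True)


# Hypothetical enrollment records: one row per learner per module.
records = [
    {"format": "video", "completed": True},
    {"format": "video", "completed": True},
    {"format": "video", "completed": False},
    {"format": "interactive quiz", "completed": True},
    {"format": "interactive quiz", "completed": True},
    {"format": "text", "completed": False},
]
print(completion_rate_by(records, "format", min_count=2))
# [('interactive quiz', 1.0), ('video', 0.6666666666666666)]
```

A ranking like this shows correlation, not cause: a format may look strong simply because motivated learners choose it. Testing the change on a closed loop before scaling it is what confirms the effect.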

Why do most teams still struggle to implement predictive analytics in EdTech?

Despite the promise, implementation often fails or stalls at the level of dashboards, engagement heatmaps, and reactive “insights.”

Why?

Because building prediction into a product isn’t just about plugging in an algorithm. And here are the main blockers:

No clear objective. Teams want to “use data,” but never define what they’re trying to predict, or how that prediction will drive action.

Fragmented or messy data. If user data is scattered across systems, inconsistent, or incomplete, the model has no solid foundation.

Lack of clean feedback loops. Predictions get made, but outcomes aren't tracked — so the model never learns what actually worked.

No integration into actual workflows. Predictions don’t plug into content delivery, pacing, support, or operations — so nothing changes.

Stuck at the dashboard stage. Teams collect “insights” but don’t connect them to any intervention logic or product decisions.

Lack of ownership. Predictive analytics lives in a silo, with no one owning it across product, pedagogy, data, and support.

No one to translate insights into action. Even when good predictions happen, there’s no clear process or team to act on them.

Overcomplicating the first step. Teams go straight for full AI before getting the basics right: goals, data structure, and response logic.

That’s why most teams stall. They launch dashboards, build “insight layers,” maybe even run some predictions, and still never change the actual outcome.

So if you’re serious about doing this right, our experience points to one recommendation: start with the fundamentals.


How do you implement predictive analytics in your EdTech product?

Here’s a simple flow we recommend to most companies that haven’t implemented predictive analytics yet but are ready to start.

It’s not based on theory, wishful thinking, or overcomplicated tooling — it reflects what actually works in real EdTech products when you need to move fast, stay lean, and get real outcomes.

| Step | What it involves |
| --- | --- |
| Define one clear, high-leverage goal | Do you want to reduce drop-offs? Increase completions? Surface better content recommendations? Pick something that matters and that you’re actually ready to act on. |
| Identify the data signals tied to that goal | Look at what you already track and what you don’t. Which signals actually connect to the outcome you care about? If key inputs are missing, start tracking them now in a clean, structured, and usable way. |
| Map the prediction-action loop | This is where many teams fail. If your system predicts a risk, what exactly should happen next? Is it an automated alert? A content adjustment? A trigger for human intervention? If there’s no action tied to the prediction, it’s just decoration. |
| Choose the simplest model that can do the job | You don’t need a complex neural network to flag disengaged users, at least not at the first stage. A basic model that gives you clear signals is usually enough to start. Once it works, you can improve it further. |
| Test on a closed loop and measure the outcome | Pick a group. Run predictions. Trigger actions. Track what changed. Did the thing improve? If yes, scale the test. If not, check whether the prediction was wrong or the action wasn’t effective, then fix and rerun. |
| Refine based on what actually works | Feed the results back into the system. Adjust thresholds, update triggers, and learn which signals actually lead to better outcomes. Every round should make the next one smarter. |
| Build it into your product logic | Decide where the predictions should actually land: course structure, pacing, support flow, user messaging, reporting layer. Then hardwire them in. Make sure predictions trigger something real inside the user journey or internal workflow. |
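The closed-loop test can be as simple as splitting flagged learners into a group that receives the intervention and a holdout group that doesn’t, then comparing re-engagement rates. The Python sketch below assumes that setup; `assign_groups`, `reengagement_lift`, the 50/50 split, and the learner data are all hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple


def assign_groups(learner_ids: Sequence[str], holdout_share: float = 0.5,
                  seed: int = 42) -> Tuple[List[str], List[str]]:
    """Randomly split flagged learners into a treatment group and a holdout group."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    treatment: List[str] = []
    holdout: List[str] = []
    for lid in learner_ids:
        (holdout if rng.random() < holdout_share else treatment).append(lid)
    return treatment, holdout


def reengagement_lift(outcomes: Dict[str, bool], treatment: Sequence[str],
                      holdout: Sequence[str]) -> float:
    """Re-engagement rate of the treatment group minus that of the holdout group."""
    def rate(group: Sequence[str]) -> float:
        if not group:
            raise ValueError("each group needs at least one learner")
        return sum(outcomes[lid] for lid in group) / len(group)
    return rate(treatment) - rate(holdout)


# Hypothetical run: split the flagged learners, send the intervention to the treatment
# group only, then record outcomes (True = re-engaged within the test window).
flagged = ["a", "b", "c", "d", "e", "f"]
treatment, holdout = assign_groups(flagged)
outcomes = {"a": True, "b": True, "c": False, "d": False, "e": True, "f": False}
print(treatment, holdout, reengagement_lift(outcomes, treatment, holdout))
```

A positive lift suggests the action helps, but with small groups the difference can easily be noise, so a statistical significance test and a larger sample are worth adding before scaling.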

As small as this first stage may sound, it gives you a real foundation for improving both educational and organizational processes in your EdTech product, while helping you avoid the major pitfalls that make most companies stall and waste time, money, and trust.

If you want to figure out what these first steps might look like for your company — what outcomes to expect, what tech stack to use, how much time and budget it may take — feel free to request a free consultation.

We’ll help you build a realistic, transparent roadmap grounded in the product work we’ve done building, integrating, and scaling predictive systems and AI inside actual EdTech products.

Why trust Geniusee?

We are a software development company with 180+ successfully completed projects, many of which were education software development projects. One such client was Booster Prep, an interactive e-learning platform for medical school applicants used by 80,000+ students worldwide. You can see other related projects in our portfolio.

All of this means we know the domain, have hands-on experience with the relevant tech, and can genuinely help if you have questions or are looking for an experienced technical partner to support your business.

So, if you need our expertise — don’t hesitate to reach out. We’ll be happy to help ensure your EdTech project has strong, reliable technical support behind it.

Final thoughts

Predictive analytics isn’t about ticking all the AI boxes or building another dashboard. It’s about giving your product the ability to see problems early, act faster, and improve over time.

Whether you’re just starting out or trying to make an existing system smarter, even a simple, working prediction-action loop can make a real difference — often more than chasing complexity and getting stuck for months or years.

So: start small, stay focused, make it real. And if you need help, we’re here.