In recent years, artificial intelligence has gained significant traction in the legal sector. According to the 2024 ABA Legal Technology Survey Report: Combined, published by the American Bar Association (ABA), the share of lawyers using AI tools rose from 11% in 2023 to 30% in 2024, nearly a threefold increase in a single year.

The main drivers of this trend are the desire to boost productivity and efficiency, save time, and deliver more value to clients. AI-powered tools can handle document review, legal research, contract analysis, and more.

The Thomson Reuters Future of Professionals survey supports this: its key takeaway is that AI could save lawyers about 4 hours per week, or roughly 200 hours a year. That translates to approximately $100,000 in new billable time per lawyer annually.
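The survey summary does not spell out how hours become dollars, but the conversion can be reconstructed. The sketch below assumes roughly 50 working weeks a year and a billing rate of about $500 per hour, the rate the two published figures jointly imply; both numbers are our assumptions, not the survey's.

```python
# Assumptions (ours, not the survey's): ~50 working weeks per year and a
# ~$500/hour billing rate, the rate implied by 200 hours <-> $100,000.
HOURS_SAVED_PER_WEEK = 4
WORKING_WEEKS_PER_YEAR = 50
HOURLY_RATE_USD = 500

# Annual time saved, then the billable value of that time.
annual_hours = HOURS_SAVED_PER_WEEK * WORKING_WEEKS_PER_YEAR
annual_value_usd = annual_hours * HOURLY_RATE_USD

print(f"{annual_hours} hours/year -> ${annual_value_usd:,} of billable time")
```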

So, let’s see how these advancements affect lawyers’ day-to-day work, what the implementation options are, and how Geniusee developed a solution to ease the workload of legal professionals.

Real-life use cases in the legal sector

The chatbot market has grown significantly in recent years, driven by its ability to streamline processes and improve efficiency. The numbers speak for themselves: the market was valued at $5.4 billion in 2023 and is projected to grow at a CAGR of 23.3% from 2023 to 2028, reaching $15.5 billion in revenue by 2028. Here are some notable success stories:
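As a quick sanity check, compounding the 2023 figure at the quoted CAGR should land close to the 2028 forecast. A minimal sketch using only the figures from the text:

```python
def project_value(start: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return start * (1 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Back out the constant annual growth rate linking two values."""
    return (end / start) ** (1 / years) - 1


# Figures quoted in the text: $5.4B in 2023, 23.3% CAGR, 2023 -> 2028 (5 years).
projected_2028 = project_value(5.4, 0.233, 5)
rate = implied_cagr(5.4, 15.5, 5)

print(f"Projected 2028 market: ${projected_2028:.1f}B")
print(f"CAGR implied by $5.4B -> $15.5B: {rate:.1%}")
```

Compounding $5.4 billion at 23.3% for five years gives about $15.4 billion, and the rate implied by the two endpoints is about 23.5%, so the quoted figures agree up to rounding.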

DoNotPay, billed as an "AI lawyer" chatbot, was created to help users appeal parking fines and other penalties for free. In its first 21 months of operation, DoNotPay reportedly won 160,000 of 250,000 ticket cases (a 64% success rate), saving drivers an estimated $15 million in fines.

Visabot is an AI bot that helps with immigration matters by automating a significant part of the visa process. According to its developers, the bot can automatically perform about 80% of the typical steps.

AI for in-house lawyers: The Geniusee Legal Advisor

Recognizing the increasing complexity of legal workflows, we decided to build an internal Legal Advisor chatbot tailored to the unique needs of in-house legal teams. We chose the legal domain to reduce lawyers’ workload when drafting contracts, keep pace with dynamic regulatory changes, and give employees up-to-date answers.

Our goal was to create an AI-powered assistant capable of delivering accurate, context-aware legal responses drawn from internal documentation while maintaining the highest security standards.

Why not use another, more common approach?

At first glance, building such a solution may not seem justified, since simpler options exist: for example, integrating Google’s Programmable Search Engine or using a framework built around large language models (LLMs), such as LlamaIndex. However, these options share similar challenges, which fall into several key areas:

Data security & compliance

Under common security standards, not all of your data can or should be shared with third parties, even if you are willing to do so. For example, internal documents related to HR policies or legal contracts may contain sensitive information that must remain confidential.

Personal data leakage in prompt processing

A significant risk of sending data to third-party services that lack the necessary compliance level is that they may use your data to train new models. Competitors could then extract your proprietary information from those models through advanced prompt engineering techniques.

Data is your oil

AI companies are constantly seeking new data sources, and your internal documents are part of your company's competitive advantage. By sharing them, you risk giving away part of your intellectual property. The concern grows when the data relates to your product’s users: external parties may then extract valuable industry insights from models trained on that data without ever accessing it directly.

Domain-specific adaptation of AI

General-purpose AI models are not adapted to a specific domain out of the box. To get relevant answers, you often need to add domain expertise to the prompt. We usually recommend addressing this with careful prompt engineering or fine-tuning.
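One common way to inject domain expertise is a system prompt that fixes the assistant's role and rules, combined with retrieved context excerpts. The sketch below is illustrative only: the prompt wording, the `build_messages` helper, and the chat-style message format (role/content dicts, as accepted by many LLM chat APIs) are our assumptions, not the Legal Advisor's actual prompt.

```python
from textwrap import dedent

# Hypothetical domain rules; in practice these would be maintained with the legal team.
LEGAL_SYSTEM_PROMPT = dedent("""\
    You are an assistant for an in-house legal team.
    Answer only from the provided context excerpts.
    Cite the document title and section for every statement.
    If the context does not contain the answer, say so and recommend consulting a lawyer.
    """)


def build_messages(question: str, excerpts: list[dict]) -> list[dict]:
    """Assemble a chat-style message list that injects domain rules and retrieved context."""
    # Each excerpt is labeled with its source so the model can cite it.
    context = "\n\n".join(
        f"[{e['title']} §{e['section']}]\n{e['text']}" for e in excerpts
    )
    return [
        {"role": "system", "content": LEGAL_SYSTEM_PROMPT},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]
```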

Sovereign AI and language bias

Sovereign AI refers to a nation’s ability to develop AI using its own infrastructure, data, workforce, and business networks. General-purpose AI models can be problematic because they perceive the world through the languages they were trained on. If a language is underrepresented or absent from the training data, the more prominent languages dominate and shape the model’s worldview accordingly.

Key features of the Legal Advisor chatbot

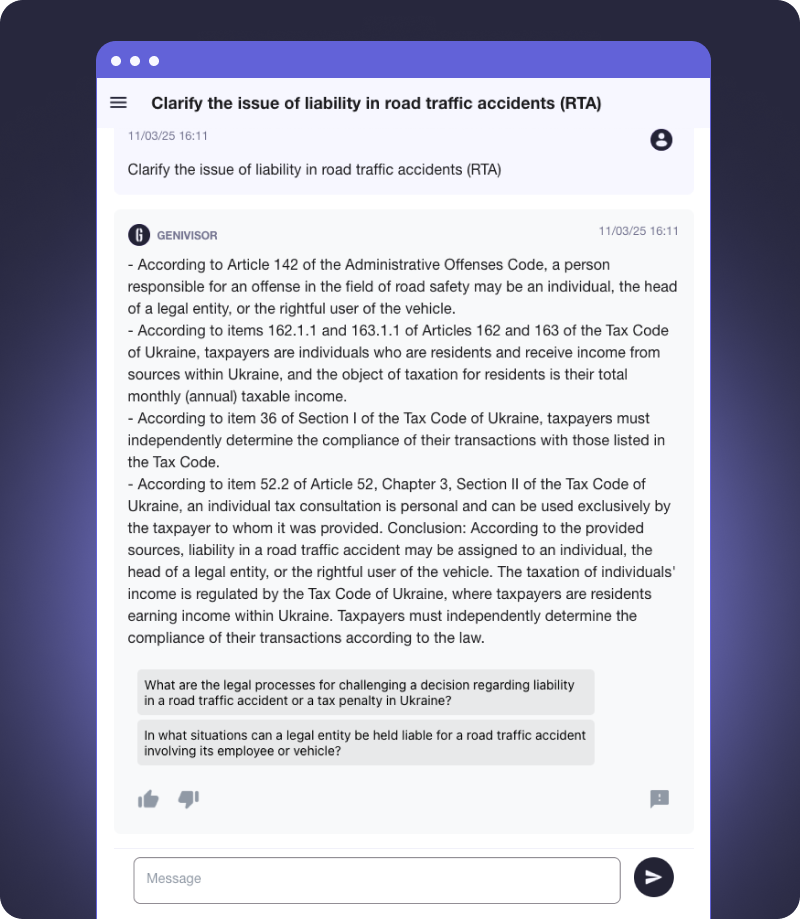

Beyond a standard chat interface, we set out to improve how users interact with the assistant. In addition to accurate responses, our goals are greater efficiency and intuitive engagement.

Below are the main features we are implementing:

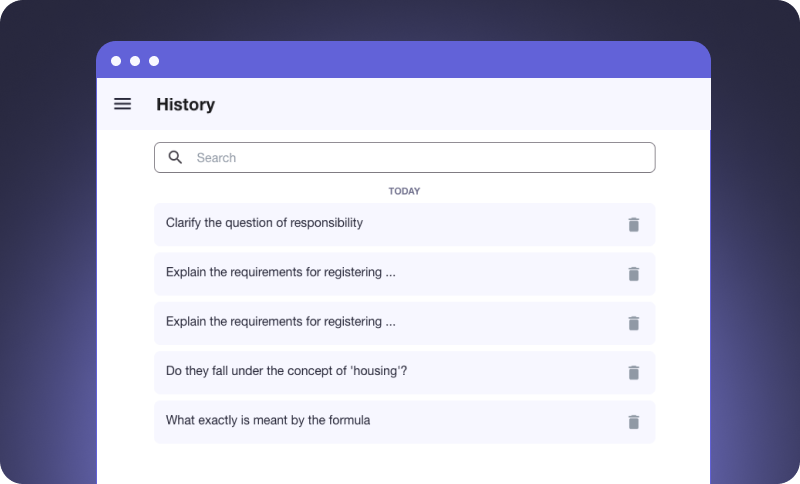

History to review

While this may seem like a simple feature, it is crucial that if a document is updated or modified, the chat history reflects the version available at the time of the conversation rather than the updated one. This will allow users to review previous discussions in the correct context of the corresponding document version.
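One way to implement this is to keep documents append-only and have each chat message record the exact version of every source it cited. The sketch below uses hypothetical in-memory classes (`DocumentStore`, `ChatMessage`); a real system would persist the same information, for example as a version field stored next to each indexed chunk.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DocumentVersion:
    doc_id: str
    version: int
    text: str


@dataclass
class DocumentStore:
    """Append-only store: an update adds a new version instead of overwriting."""
    versions: dict[str, list[DocumentVersion]] = field(default_factory=dict)

    def publish(self, doc_id: str, text: str) -> DocumentVersion:
        history = self.versions.setdefault(doc_id, [])
        v = DocumentVersion(doc_id, len(history) + 1, text)
        history.append(v)
        return v

    def latest(self, doc_id: str) -> DocumentVersion:
        return self.versions[doc_id][-1]

    def get(self, doc_id: str, version: int) -> DocumentVersion:
        return self.versions[doc_id][version - 1]


@dataclass(frozen=True)
class ChatMessage:
    question: str
    answer: str
    # (doc_id, version) pairs pinned at the moment the answer was generated.
    sources: tuple[tuple[str, int], ...]


def sources_for_review(store: DocumentStore, msg: ChatMessage) -> list[DocumentVersion]:
    """Return the cited documents as they were at the time of the conversation."""
    return [store.get(doc_id, version) for doc_id, version in msg.sources]
```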

Follow-up questions

An essential element of user-friendly UI/UX is the ability to continue a conversation without typing clarifying questions manually. One of the most convenient approaches is to automatically generate relevant follow-up questions that users can select in a click or two. This makes the assistant appear to anticipate the user's intentions, so the interaction feels more natural and intuitive.
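A minimal sketch of follow-up generation, assuming the LLM is reachable through any function that takes a prompt string and returns text. The prompt wording and the `suggest_follow_ups` helper are hypothetical; the parsing step strips the list numbering that models commonly add, so the questions can be rendered as clickable suggestions.

```python
import re
from typing import Callable

# Hypothetical prompt template.
FOLLOW_UP_PROMPT = (
    "Given the user's question and the assistant's answer below, suggest up to "
    "{n} short follow-up questions the user is likely to ask next. "
    "Return one question per line, numbered.\n\n"
    "Question: {question}\nAnswer: {answer}"
)


def suggest_follow_ups(
    question: str, answer: str, llm: Callable[[str], str], n: int = 3
) -> list[str]:
    """Ask the LLM for follow-up questions and clean them up for display."""
    raw = llm(FOLLOW_UP_PROMPT.format(n=n, question=question, answer=answer))
    suggestions: list[str] = []
    for line in raw.splitlines():
        # Strip list markers such as "1.", "2)" or "-" that models often add.
        cleaned = re.sub(r"^\s*(?:\d+[.)]|[-*])\s*", "", line).strip()
        if cleaned and cleaned not in suggestions:  # skip blanks and duplicates
            suggestions.append(cleaned)
    return suggestions[:n]
```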

Provide feedback

Building trust is crucial in the legal industry. To strengthen it and keep improving the product, we introduced a feedback mechanism: users can rate answers and comment on their relevance. This feedback helps us improve the system and the user experience, and shows us where answers fall short.
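To make ratings actionable, feedback can be tied back to the documents each answer cited, so sources behind poorly rated answers stand out. The record shape and the aggregation below are a hypothetical sketch, not the product's actual schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Feedback:
    answer_id: str
    helpful: bool       # thumbs up / thumbs down
    comment: str = ""   # optional free-text note on relevance


def helpfulness_by_source(
    feedback: list[Feedback], answer_sources: dict[str, list[str]]
) -> dict[str, float]:
    """Share of helpful ratings per source document.

    answer_sources maps answer_id -> ids of the documents cited in that answer.
    """
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # doc_id -> [helpful, total]
    for fb in feedback:
        for doc_id in answer_sources.get(fb.answer_id, []):
            counts[doc_id][0] += int(fb.helpful)
            counts[doc_id][1] += 1
    return {doc_id: helpful / total for doc_id, (helpful, total) in counts.items()}
```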

System design and architecture

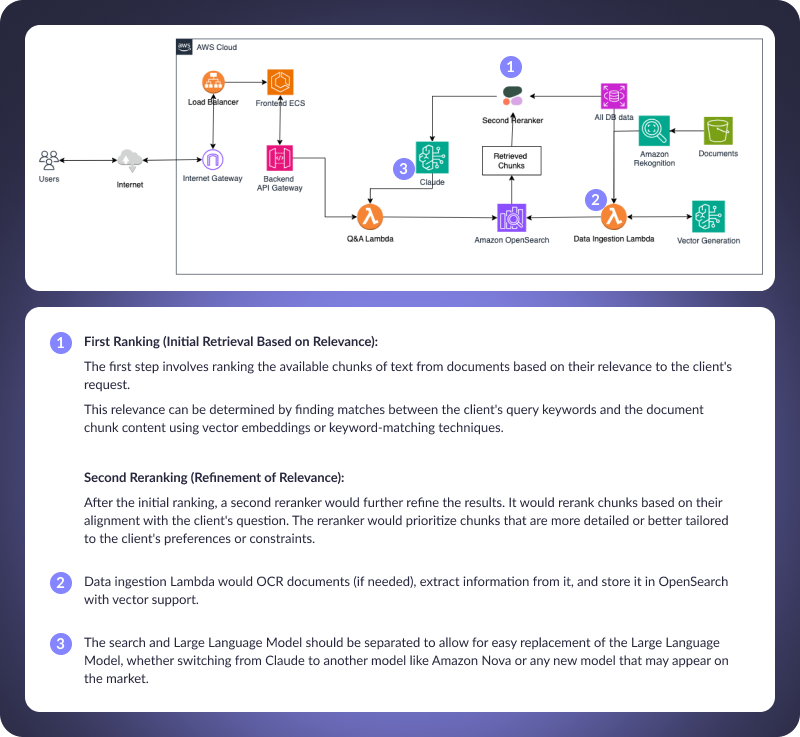

When designing the system, we prioritized smooth reuse, data security, and scalability, while letting the client manage their own data. We built the solution on AWS to meet these requirements.

The system is built on the AWS cloud infrastructure and includes several main components:

| Tech component | Function |
|---|---|
| Frontend ECS | Web interface for users |
| Backend API gateway | Gateway that processes user requests |
| Q&A Lambda | Function that processes queries using the large language model (LLM) |
| Amazon OpenSearch | Database for storing and quickly searching documents |
| Data Ingestion Lambda | Function that preprocesses documents, including OCR and vectorization |
| Amazon Rekognition | Service for text and object recognition in images |
| Vector Generation | Mechanism that creates vector representations of documents for improved search |
| Second Reranker | Additional ranking layer applied to search results |

The system is structured to process, store, and retrieve legal documents efficiently, leveraging AI-driven search mechanisms, document indexing, and automated data ingestion workflows. Each component plays a crucial role in optimizing search accuracy, response generation, and compliance with legal data privacy standards.

Let’s jump right into each step:

Searching for information (first level of ranking)

After receiving a request, the system performs an initial search in the document database. Amazon OpenSearch is used to find relevant text fragments quickly. The system compares the user's query with text and vector representations of documents. The first level of ranking selects the most relevant fragments.
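Conceptually, this first level is a hybrid search that blends a keyword score with vector similarity. In production, OpenSearch computes these scores itself (BM25 for text, k-NN for vectors); the in-memory sketch below only illustrates the idea, using a crude term-overlap score as a stand-in for BM25 and an assumed weighting parameter `alpha`.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms present in the text (a crude stand-in for BM25)."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def hybrid_search(
    query: str, query_vec: list[float], chunks: list[dict], k: int = 3, alpha: float = 0.5
) -> list[tuple[float, str]]:
    """Rank chunks by a weighted mix of keyword and vector similarity.

    chunks: dicts with "text" and "vector" keys; alpha weights the vector score.
    """
    scored = [
        ((1 - alpha) * keyword_score(query, c["text"]) + alpha * cosine(query_vec, c["vector"]),
         c["text"])
        for c in chunks
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]
```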

Refining search results (second level of ranking)

The Second Reranker then analyzes the fragments returned by the initial search and re-evaluates their relevance to the query, which can involve additional semantic analysis and prioritization. The top-ranked fragments are passed to the Q&A Lambda, which can call the LLM to generate the answer returned to the user.
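A second-level reranker typically rescores each (query, passage) pair jointly, for example with a cross-encoder model. This is slower but more precise than first-level search, so it is applied only to the shortlist. In the sketch below, `score_pair` and `llm` are placeholder callables standing in for the reranking model and the LLM; the helper names and the prompt wording are hypothetical.

```python
from typing import Callable


def rerank(
    query: str,
    candidates: list[str],
    score_pair: Callable[[str, str], float],
    top_k: int = 3,
) -> list[str]:
    """Second-level ranking: rescore each (query, passage) pair and keep the best."""
    ranked = sorted(candidates, key=lambda passage: score_pair(query, passage), reverse=True)
    return ranked[:top_k]


def answer_question(
    query: str,
    candidates: list[str],
    score_pair: Callable[[str, str], float],
    llm: Callable[[str], str],
    top_k: int = 3,
) -> str:
    """Rerank the shortlist, then ask the LLM to answer from the top passages only."""
    context = "\n\n".join(rerank(query, candidates, score_pair, top_k))
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {query}"
    return llm(prompt)
```

Because the reranker only sees the shortlist from the first level, its cost stays bounded as the document base grows.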

Loading and processing new documents

Data Ingestion Lambda receives new documents and starts processing them. If the document contains images, the system calls Amazon Rekognition to perform Optical Character Recognition (OCR). The extracted text and structured data go through the Vector Generation process.
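The OCR and preprocessing steps could look roughly like the sketch below. `detect_text` is the Amazon Rekognition operation as exposed by boto3 (`boto3.client("rekognition")`); it returns both LINE and WORD detections, so only lines are kept. The helper names, chunk size, and overlap are illustrative assumptions; the resulting chunks are what would be handed to Vector Generation.

```python
def ocr_image(image_bytes: bytes, rekognition_client) -> str:
    """Extract text lines from an image with Amazon Rekognition DetectText."""
    response = rekognition_client.detect_text(Image={"Bytes": image_bytes})
    # DetectText reports each LINE and each WORD; keeping lines avoids duplicates.
    lines = [d["DetectedText"] for d in response["TextDetections"] if d["Type"] == "LINE"]
    return "\n".join(lines)


def chunk_text(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping word windows before embedding."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # last window reached the end
            break
    return chunks


# In a Lambda, the client would be created once, e.g.:
#   import boto3
#   rekognition = boto3.client("rekognition")
#   chunks = chunk_text(ocr_image(image_bytes, rekognition))
```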

Indexing in OpenSearch

After processing, documents are stored in Amazon OpenSearch in two formats:

Text indexes – for classic search queries.

Vector representations – for deeper semantic search.

This approach strikes a balance between search speed and accuracy.
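Storing both representations in one OpenSearch index only requires a mapping with a `text` field for full-text queries and a `knn_vector` field for embeddings, with k-NN enabled on the index. The field names and helper functions below are assumptions, and the vector dimension must match whatever embedding model produces the vectors.

```python
def build_index_body(dimension: int) -> dict:
    """OpenSearch index settings and mappings for hybrid (text + vector) search."""
    return {
        # k-NN must be enabled at the index level to use knn_vector fields.
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                "doc_id": {"type": "keyword"},    # exact-match filter by document
                "version": {"type": "integer"},   # document version the chunk belongs to
                "text": {"type": "text"},         # analyzed field for classic search queries
                "embedding": {"type": "knn_vector", "dimension": dimension},  # semantic search
            }
        },
    }


def build_chunk(doc_id: str, version: int, text: str, embedding: list[float]) -> dict:
    """One indexed chunk carrying both the text and its vector representation."""
    return {"doc_id": doc_id, "version": version, "text": text, "embedding": embedding}
```

With the `opensearch-py` client, the body would be passed as `client.indices.create(index="legal-docs", body=build_index_body(1024))`, and each processed chunk indexed with `client.index(index="legal-docs", body=chunk)`; the index name and dimension here are placeholders.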

If I have specific requirements, how can you help me meet them?

We understand that each company has unique requirements that call for customization. Whether you need to integrate the legal assistant with a legacy system, process different data formats, or ensure seamless compatibility with internal workflows, we tailor the system to your specific needs.

Based on our experience working with legal organizations, we have outlined the main challenges (or potential scenarios) that require a flexible, adaptable approach. Let’s go through them:

❌ All my data is stored in a specific infrastructure.

Not all organizations operate in the cloud; some may require on-premises or hybrid cloud solutions to comply with internal security policies or regulatory requirements.

✅ Our system can be deployed on AWS, in a private cloud, or on-premises, so your legal data remains within infrastructure you control.

❌ My company works with different types of data (audio and video).

Recordings of internal meetings and briefings need to be processed before insights can be extracted from them.

✅ The system can transcribe these recordings and add the key information to the legal knowledge base.

❌ Our regulations are stored on a WordPress site.

Many organizations manage internal policies, legal documentation, and compliance guidelines through CMS platforms like WordPress.

✅ We can integrate the AI chatbot with your existing website. It automatically extracts, indexes, and searches the content, so your team can query up-to-date regulations directly from the chatbot.
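WordPress exposes published pages through its REST API (`/wp-json/wp/v2/pages`), which makes this kind of integration straightforward. The sketch below fetches pages, strips the HTML, and keeps only pages modified since the last sync, so only changed regulations are re-indexed. The helper names and the sync logic are our assumptions; posts (`/wp-json/wp/v2/posts`) and authentication for private content would need similar handling.

```python
import json
from html.parser import HTMLParser
from urllib.request import urlopen


class _TextExtractor(HTMLParser):
    """Collect text nodes, inserting a space at block-level tags."""
    BLOCK_TAGS = {"p", "div", "li", "br", "tr", "h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()  # convert_charrefs=True decodes entities like &amp;
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.BLOCK_TAGS:
            self.parts.append(" ")

    def handle_data(self, data):
        self.parts.append(data)


def html_to_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join("".join(parser.parts).split())  # normalize whitespace


def fetch_pages(site_url: str, page: int = 1) -> list[dict]:
    """Fetch one page of results from the WordPress REST API (network call)."""
    url = f"{site_url.rstrip('/')}/wp-json/wp/v2/pages?per_page=100&page={page}"
    with urlopen(url) as resp:
        return json.load(resp)


def to_documents(pages: list[dict], last_sync: str = "") -> list[dict]:
    """Convert REST API page objects into plain-text documents changed since last_sync."""
    docs = []
    for p in pages:
        # modified_gmt values share one ISO 8601 format, so string comparison works.
        if p["modified_gmt"] > last_sync:
            docs.append({
                "doc_id": f"wp-{p['id']}",
                "title": html_to_text(p["title"]["rendered"]),
                "text": html_to_text(p["content"]["rendered"]),
                "modified": p["modified_gmt"],
            })
    return docs
```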

❌ Decisions made during meetings should trigger policy updates.

In many organizations, policies and regulations evolve based on meeting discussions and voting outcomes.

✅ Legal Advisor can detect when a new decision impacts existing legal frameworks and notify users that specific policies must be updated accordingly. This ensures that regulatory documents always reflect the latest decisions and compliance requirements.

Need a custom solution?

The challenges legal teams face extend far beyond this list. Our specialists continuously enhance the chatbot to address additional requirements and pain points of in-house legal teams. Let us tailor the architecture to your exact needs: consult our specialists to integrate AI systems into your existing workflows easily and efficiently.