Picture this: you’re close to taking your company to the next level of innovation, but something is standing in your way. Your AI-powered tools are impressive, yet their output isn’t precise or insightful enough to meet your users’ expectations. The culprit? Ineffective prompting.

Perfecting prompt engineering in artificial intelligence (AI) is not a luxury but a necessity. Yet many companies fail to get the most out of their AI models, because crafting the right prompts is much harder than it looks.

That’s where we come in. Building cutting-edge technology and then watching it fall short is frustrating; we get it. Through our prompt engineering practice, we help startups and growing enterprises turn their AI tools into precision-driven assets.

In this guide, we look at the challenges of prompt optimization, outline popular practices, and walk through examples of how it is used. Let’s get started.

What is prompt engineering, and why does it matter?

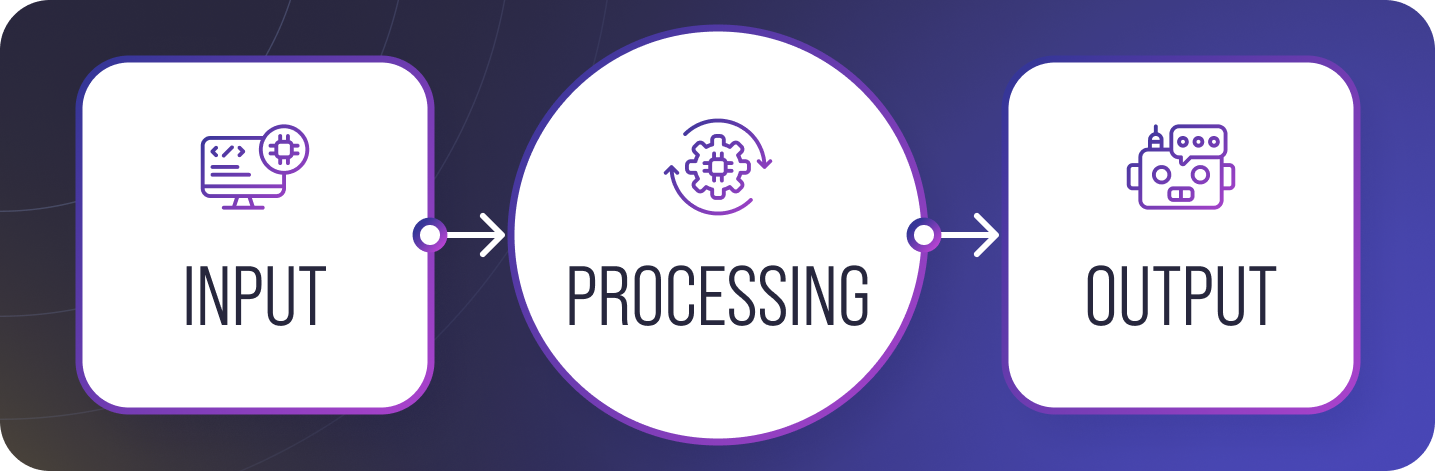

Simply put, prompt engineering is the practice of writing precise, practical inputs that steer AI models such as ChatGPT and other large language models (LLMs). Prompt design is the foundation for getting meaningful and accurate results from generative AI: a good prompt can radically change what the model gives back. Done well, prompt engineering produces output in exactly the form you want.

Even for companies working in natural language processing (NLP), the inner workings of a language model remain largely a mystery. Sometimes changing a single word in a prompt noticeably improves the result. Without careful prompting, even the most capable, up-to-date AI models can produce questionable output that lacks insight, useful information, and common sense.

The role of prompt engineers in AI success

A good prompt engineer understands intuitively that the mapping between a model’s input and its output is not linear: small changes in wording can shift the response significantly. They know how LLMs work, what they do well and what they do badly, and they use that insight to craft prompts that work around the model’s limitations and make the most of its capabilities.

For example, prompt crafting is key to getting reliable results from AI tools for customer service, content generation, or predictive analytics.

Best practices

The trick is that prompt engineers must understand both what to ask and how to ask it; that is how they guide AI toward new solutions. The phrasing of a prompt directly affects how large language models (LLMs) perform and respond. Here are some of our most effective prompt engineering strategies for helping generative AI do its job better and more reliably:

1. Clarity and precision

Assigning a role to the AI is one of the most effective prompt engineering techniques. When you tell the model to act as someone, or to answer from someone’s perspective, you implicitly steer it in a sensible direction. For example:

“You are a financial advisor. Advise a beginner who has $1,000 to invest.”

The assigned role helps the AI deliver relevant, specialized answers.
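In chat-style APIs, a role is usually assigned through a system message. The sketch below assumes the widely used OpenAI-style message format (a list of dictionaries with `role` and `content` keys); `build_role_messages` is a hypothetical helper, and the code only assembles the messages rather than calling any API.

```python
def build_role_messages(role: str, task: str) -> list[dict[str, str]]:
    """Assemble a chat prompt that assigns the model a role.

    Hypothetical helper: assumes an OpenAI-style message list and does
    not call any API itself.
    """
    return [
        # The system message sets the persona the model should adopt.
        {"role": "system", "content": f"You are {role}."},
        # The user message carries the actual request.
        {"role": "user", "content": task},
    ]


messages = build_role_messages(
    "a financial advisor",
    "Advise a beginner who has $1,000 to invest.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the role in the system message means you can reuse the same persona across many user requests.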

2. Richer responses

A bare question gives the model little to work with. Adding context to a prompt helps the model construct more meaningful and targeted responses.

For example, instead of simply asking “How do I improve my website?”, you can frame the prompt in a more layered way:

“As a digital marketing consultant, suggest an SEO strategy to improve a small e-commerce website’s ranking and engagement.”

You can also layer additional context into your current prompt to get an even more specific response. Stating goals, roles, or constraints gives a large language model what it needs to provide actionable, nuanced insights.
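One way to apply this consistently is to keep the bare question separate from the context you layer on top of it. The minimal sketch below uses a hypothetical helper, `add_context`, that prepends an optional role, goal, and list of constraints to a question; the labels and field names are illustrative assumptions, not a standard format.

```python
from typing import Optional, Sequence


def add_context(
    question: str,
    role: Optional[str] = None,
    goal: Optional[str] = None,
    constraints: Sequence[str] = (),
) -> str:
    """Wrap a bare question with role, goal, and constraints (hypothetical helper)."""
    parts = []
    if role:
        parts.append(f"Act as {role}.")
    if goal:
        parts.append(f"Goal: {goal}")
    if constraints:
        # One constraint per line keeps them easy for the model to follow.
        parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in constraints))
    parts.append(question)
    return "\n".join(parts)


# Without context, the question passes through unchanged.
print(add_context("How do I improve my website?"))
print()
print(
    add_context(
        "Suggest an SEO strategy.",
        role="a digital marketing consultant",
        goal="improve a small e-commerce website's ranking and engagement",
        constraints=["Prioritize low-cost tactics", "Focus on organic search"],
    )
)
```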

3. Examples

Another effective technique is giving examples within the prompt, because ambiguity can derail an AI model’s output. A prompt such as:

“Tell, in two sentences, what this article says.”

is less effective than:

“Summarize the following article in two sentences, in a style similar to this example: ‘The study shows the benefits of exercise, including improved cardiovascular health and mental clarity.’”

Examples guide the AI’s tone and structure, making it more likely that the output matches your expectations.
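Prompts that include examples are often called few-shot prompts. The sketch below shows one common way to assemble one: an instruction, one or more input/output example pairs, and then the new input with its output left blank for the model to complete. The `Input:`/`Output:` labels and the `few_shot_prompt` helper are illustrative assumptions; any consistent format works.

```python
def few_shot_prompt(
    instruction: str,
    examples: list[tuple[str, str]],
    new_input: str,
) -> str:
    """Build a few-shot prompt from an instruction and example pairs (hypothetical helper)."""
    blocks = [instruction]
    for example_input, example_output in examples:
        blocks.append(f"Input: {example_input}\nOutput: {example_output}")
    # Leave the last output blank so the model continues the pattern.
    blocks.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(blocks)


prompt = few_shot_prompt(
    "Summarize each article in two sentences.",
    [(
        "<text of a study on exercise>",
        "The study shows that exercise improves cardiovascular health. "
        "It also links exercise to greater mental clarity.",
    )],
    "<text of the article to summarize>",
)
print(prompt)
```

A single example is often enough to fix the tone and length; adding more examples helps when the format is unusual.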

4. Iterative refinement

Not every prompt works like a charm on the first try. Iterative refinement means testing successive versions of a prompt and adjusting each one based on the results you get. Improvements might include making the prompt more specific, simplifying instructions, rephrasing unclear requests, or simply fixing a wording mistake.

For instance, a prompt that asks “Create a marketing plan” may produce a long, unfocused output.

In contrast, asking “Develop a five-step digital marketing plan targeted at Gen Z consumers in the fashion industry” is likely to produce a far more useful result.
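Iterative refinement becomes more systematic when every prompt version is scored against the same criteria. The sketch below assumes you supply two functions: `generate`, which sends a prompt to your model and returns its text, and `score`, which rates a response (for example, with a rubric or human ratings). Both, along with `refine` itself, are hypothetical; the toy stand-ins in the usage code only demonstrate the control flow.

```python
from typing import Callable


def refine(
    variants: list[str],
    generate: Callable[[str], str],
    score: Callable[[str], float],
) -> tuple[str, float]:
    """Try each prompt version and return the best-scoring one (hypothetical helper)."""
    best_prompt, best_score = variants[0], float("-inf")
    for prompt in variants:
        current = score(generate(prompt))
        print(f"{current:5.1f}  {prompt}")
        if current > best_score:
            best_prompt, best_score = prompt, current
    return best_prompt, best_score


variants = [
    "Create a marketing plan",
    "Develop a five-step digital marketing plan targeted at Gen Z "
    "consumers in the fashion industry",
]


# Toy stand-ins so the loop runs; a real setup would call a model
# and use a proper evaluation.
def fake_generate(prompt: str) -> str:
    return prompt  # echoes the prompt instead of calling a model


def fake_score(response: str) -> float:
    # Rewards responses that mention the requested audience and structure.
    return float("Gen Z" in response) + float("five-step" in response)


best_prompt, best_score = refine(variants, fake_generate, fake_score)
print("Best prompt:", best_prompt)
```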

5. Meta prompts

Meta prompts are a more sophisticated technique: they instruct LLMs to follow multi-step processes or more complex workflows. For instance:

“First, analyze the text provided and identify its main themes (such as colors, lighting, silhouettes, facial expressions, sound, and more). Next, write a two-paragraph summary of its main arguments.”

A meta prompt breaks a task down into discrete steps so that the AI model can focus on each piece in turn.
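When the steps are sent as separate requests, this is often called prompt chaining: each step gets its own prompt, and the previous step’s output is passed along. A minimal sketch, assuming a hypothetical `generate` function that sends a prompt to your model and returns its text; `run_chain` and the toy `fake_generate` are also hypothetical.

```python
from typing import Callable


def run_chain(text: str, steps: list[str], generate: Callable[[str], str]) -> list[str]:
    """Run each step as its own prompt, passing along the previous output (hypothetical helper)."""
    outputs: list[str] = []
    for step in steps:
        previous = f"\n\nPrevious step output:\n{outputs[-1]}" if outputs else ""
        prompt = f"{step}\n\nText:\n{text}{previous}"
        outputs.append(generate(prompt))
    return outputs


steps = [
    "Analyze the text provided and identify its main themes.",
    "Write a two-paragraph summary of the text's main arguments.",
]

seen_prompts: list[str] = []


def fake_generate(prompt: str) -> str:
    # Toy stand-in for a model call: records the prompt and returns a placeholder.
    seen_prompts.append(prompt)
    return f"[response to: {prompt.splitlines()[0]}]"


outputs = run_chain("<text to analyze>", steps, fake_generate)
for output in outputs:
    print(output)
```

Splitting the steps this way also lets you inspect or correct the intermediate result before the next step runs.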

6. Prompt structuring

Structuring a prompt is straightforward. Consider organizing your prompts around these components:

- Name the task.
- Set the tone and style.
- Provide an example.
- Specify the output format.

This structure reduces ambiguity and makes it more likely that the output meets your expectations.
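The four components can be captured in a reusable template so that no prompt leaves one out. This is a minimal sketch; the `PromptSpec` class, its field names, and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the four prompt components."""

    task: str
    tone: str
    example: str
    output_format: str

    def render(self) -> str:
        # Label each component explicitly so nothing is left implicit.
        return "\n".join([
            f"Task: {self.task}",
            f"Tone and style: {self.tone}",
            f"Example: {self.example}",
            f"Output format: {self.output_format}",
        ])


spec = PromptSpec(
    task="Summarize the attached customer review.",
    tone="Neutral and concise.",
    example="Customer praises fast delivery but reports a damaged box.",
    output_format="One sentence, under 25 words.",
)
print(spec.render())
```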

Model finetuning

Fine-tuning is vital if you want to adapt a pre-trained AI model to your particular tasks. It requires understanding your data, choosing the right base model, and tuning the training process for better results.

Understand your data

Your dataset is the key to achieving your goal, so it should be clean and relevant. Check its size and structure, and preprocess it to improve performance.
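As an illustration of basic cleanup, the sketch below assumes your fine-tuning data is a list of prompt/response pairs (an assumption; the expected format varies by provider). The hypothetical helpers `clean_pairs` and `train_val_split` trim whitespace, drop empty or duplicate pairs, and hold out a validation set for monitoring overfitting. The sample pairs are made up.

```python
import random


def clean_pairs(pairs: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Trim whitespace, drop empty pairs, and remove exact duplicates (hypothetical helper)."""
    seen = set()
    cleaned = []
    for prompt, response in pairs:
        prompt, response = prompt.strip(), response.strip()
        if not prompt or not response:
            continue  # skip incomplete examples
        if (prompt, response) in seen:
            continue  # skip exact duplicates
        seen.add((prompt, response))
        cleaned.append((prompt, response))
    return cleaned


def train_val_split(
    pairs: list[tuple[str, str]],
    val_fraction: float = 0.2,
    seed: int = 0,
) -> tuple[list[tuple[str, str]], list[tuple[str, str]]]:
    """Shuffle deterministically and hold out a validation set (hypothetical helper)."""
    shuffled = pairs[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


raw = [
    ("What is photosynthesis?", "How plants turn light into chemical energy."),
    ("What is photosynthesis? ", "How plants turn light into chemical energy."),
    ("", "An answer with no question."),
    ("Define CAGR.", "Compound annual growth rate."),
]
data = clean_pairs(raw)
train, val = train_val_split(data, val_fraction=0.5)
print(len(data), len(train), len(val))
```

The second pair becomes a duplicate once whitespace is trimmed, and the third has an empty prompt, so both are dropped.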

Choose the right model

Choose a model trained on data similar to your inputs or on tasks similar to your goal; for example, start from a biomedical language model if you are working with medical texts. Take into account the model’s limitations, its costs, and the types of tasks it supports.

Monitor overfitting

Three popular methods for avoiding overfitting are dropout, regularization, and early stopping. Evaluate the model on a held-out validation set as you train: monitoring validation loss and accuracy helps you identify when to stop training.
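Early stopping can be expressed in a few lines: track the best validation loss seen so far and stop once it has not improved for a set number of epochs (the “patience”). This framework-agnostic sketch takes a precomputed list of losses for clarity; in practice you would compute each value after every epoch, and frameworks provide their own utilities, such as the Keras `EarlyStopping` callback. The function name `early_stopping_epoch` and the loss values are hypothetical.

```python
def early_stopping_epoch(
    val_losses: list[float],
    patience: int = 2,
    min_delta: float = 0.0,
) -> tuple[int, int]:
    """Return (best_epoch, stop_epoch) for patience-based early stopping.

    Hypothetical helper. Epochs are 0-indexed. Training stops once the
    validation loss has failed to improve by more than `min_delta` for
    `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


# Made-up validation losses: they fall, then rise as the model starts to overfit.
losses = [0.90, 0.70, 0.58, 0.55, 0.57, 0.60, 0.66]
best, stopped = early_stopping_epoch(losses, patience=2)
print(f"best epoch: {best}, stopped at epoch: {stopped}")
```

You would then keep the checkpoint from the best epoch rather than the last one.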

Optimize hyperparameters

Experiment with different settings for learning rate, batch size, and number of epochs, and use grid search or random search to find the best values. When generating text with the trained model, you can also adjust the temperature: lower values make the output more focused and predictable, while higher values make it more varied. The default temperature depends on the model and API you use.
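Grid search simply tries every combination of the candidate values and keeps the best one. In the sketch below, `grid_search` and `toy_train_and_evaluate` are hypothetical; the toy function stands in for a real fine-tuning run and returns a made-up validation loss, so only the search logic is meaningful.

```python
from itertools import product


def grid_search(train_and_evaluate, grid: dict[str, list]) -> tuple[dict, float]:
    """Try every combination in `grid`; return the one with the lowest validation loss."""
    names = list(grid)
    best_params, best_loss = {}, float("inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = train_and_evaluate(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


grid = {
    "learning_rate": [1e-5, 3e-5, 1e-4],
    "batch_size": [8, 16],
    "epochs": [2, 3],
}


def toy_train_and_evaluate(learning_rate: float, batch_size: int, epochs: int) -> float:
    # Hypothetical stand-in for a fine-tuning run: a made-up loss that is
    # lowest at learning_rate=3e-5, batch_size=16, epochs=3.
    return abs(learning_rate - 3e-5) * 1e4 + abs(batch_size - 16) / 16 + abs(epochs - 3)


best_params, best_loss = grid_search(toy_train_and_evaluate, grid)
print(best_params, best_loss)
```

This grid has 3 × 2 × 2 = 12 combinations. Random search would instead sample a fixed number of combinations, which scales better when the grid grows large.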

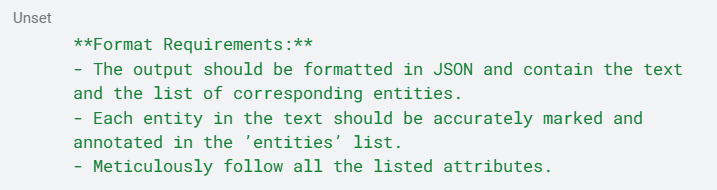

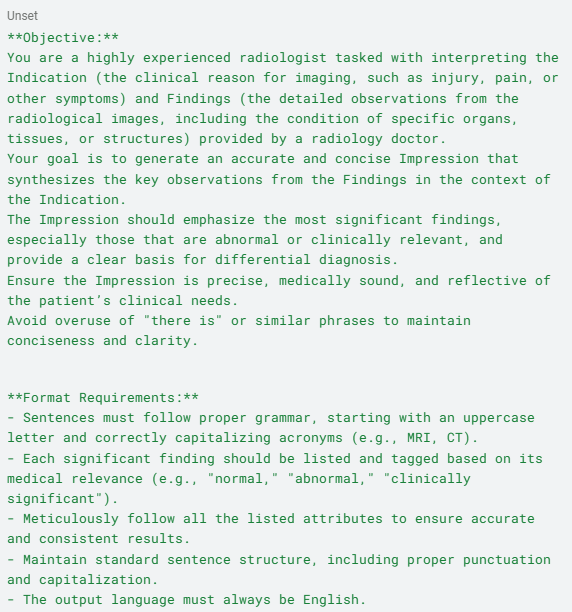

Here’s a prompt example:

Prompt engineering use cases

By 2030, the global prompt engineering market is projected to reach USD 756.2 million, growing at a compound annual growth rate (CAGR) of 32.8%. That growth reflects how many companies see prompt engineering as a way to boost productivity. If you are unsure where to apply it, here are some examples.

1. Education and e-learning

Effective prompt engineering personalizes educational platforms and learning experiences. For example, AI can generate quizzes or explain complex topics so that students learn at their own pace. Here’s what a first prompt might look like:

“Describe photosynthesis in simple words and ideas that a sixth-grade student could understand.”

Duolingo uses AI to create adaptive language exercises that fit each learner’s proficiency.

2. Healthcare and medical research

In healthcare, prompts can be used to summarize patient records, generate diagnostic hypotheses, or simulate medical case studies, with the aim of increasing productivity without sacrificing accuracy. For example, you could write:

“Summarize this patient history and suggest three possible causes for the symptoms.”

Epic Systems uses AI to automatically summarize patient records and to assist with diagnostic hypotheses.

3. Legal and compliance

Legal tech today relies on a range of prompt engineering techniques that help AI review contracts, look for potential conflicts, or even draft documents. A use case might involve:

“Identify any dangerously ambiguous clauses in this non-disclosure agreement.”

DoNotPay uses AI to generate or analyze legal documents, identifying unclear or conflicting terms so that users know what to avoid and which risks they are taking on.

4. Product development and prototyping

Prompt design helps developers explore innovative product ideas or write code. You might use a prompt like:

“Write a Python function that calculates the factorial of a number and handles errors when the input is invalid.”

GitHub Copilot can help developers write snippets such as a Python factorial function.
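For reference, here is one reasonable answer to that prompt, written by hand rather than generated by any particular tool. It raises clear errors for invalid input instead of returning a misleading value; rejecting booleans is a design choice, since `bool` is a subclass of `int` in Python.

```python
def factorial(n: int) -> int:
    """Return n! for a non-negative integer n.

    Raises:
        TypeError: if n is not an integer (booleans are rejected too).
        ValueError: if n is negative.
    """
    if isinstance(n, bool) or not isinstance(n, int):
        raise TypeError(f"factorial() expects an integer, got {type(n).__name__}")
    if n < 0:
        raise ValueError("factorial() is not defined for negative numbers")
    result = 1
    for k in range(2, n + 1):
        result *= k
    return result


print(factorial(5))  # 120

for bad in (-3, 2.5, "7"):
    try:
        factorial(bad)
    except (TypeError, ValueError) as err:
        print(f"{bad!r}: {err}")
```

Comparing a generated answer against a reference like this is a quick way to check whether the prompt was specific enough.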

5. Media and entertainment

As a content creator, you can use prompts to draft scripts and storyboards or to help edit video. Use a prompt like:

“Write a two-minute opening monologue for a talk show host about the rise of AI in entertainment.”

Scriptbook uses AI to help write and improve story scripts for movies and shows.

Limitations of prompt engineering

Prompt engineering is a powerful way to optimize AI interactions, but it is far from perfect. Knowing its limits helps you set realistic expectations and use prompts sensibly.

1. Human creativity dependence

Designing and refining prompts requires extensive human input. Crafting effective prompts is both an art and a science, or, more often, an art that looks a bit like a science, and getting them right can be a long slog.

2. Sensitivity to ambiguity

Vague or poorly structured prompts come with no guarantees, because LLMs may misinterpret them. A request such as “Write about technology,” for example, could produce an overly broad or frankly irrelevant piece of content. Learning prompt engineering improves your chances of getting good results, although the skill can be hard for new users to pick up.

3. Limited understanding of context

Despite real progress, most generative AI systems still do not understand context at a deep level. When a prompt leaves the model to fill in the gaps, it may guess wrong or go off track. For example, if you do not specify the target audience in your prompt, you may get results that are either highly technical or overly simplistic.

4. Unexplained gaps in performance across use cases

AI models may handle creative content generation better than tasks such as technical advice, where answers can easily be wrong. These differences depend on the model and on biases present in its training data.

5. Ethical and legal issues

Prompt engineering can raise ethical problems, such as potential misuse or biased and distorted output. Writing prompts that avoid harmful stereotypes and comply with relevant regulations can be challenging, especially in high-stakes domains like healthcare or finance.

Future of prompt engineering

Like the AI field as a whole, prompt engineering techniques and technologies will continue to evolve. Future developments will aim to overcome present shortcomings and broaden their use.

1. Enhanced AI models

Future large language models may have deeper contextual understanding, allowing them to respond more effectively to prompts. With more advanced multimodal AI, prompts could combine text, images, and video.

2. Automated prompt optimization

Prompt engineering is largely a process of iteratively refining prompts and measuring how they perform. Machine learning tools could automate this process, learning from performance data and proposing fixes. That would lower the barrier to entry and make prompt optimization accessible to nontechnical users.

3. Integration with no-code platforms

Integrating advanced prompt engineering features into no-code and low-code AI platforms could speed up application building, even for users with little technical expertise. This would help more sectors adopt generative AI in ways that suit their needs.

4. Personalized AI assistants

Prompt engineering holds a lot of promise for building hyper-personalized AI assistants. Such systems could adapt in real time to each user’s preferences, behavior, and goals, making interactions feel intuitive.

5. Addressing ethical challenges

As prompt design establishes itself as a distinct field, and as ethical safeguards are increasingly built directly into AI models, prompt engineering techniques will need to evolve alongside the technology. Developers can train their systems to identify potentially harmful or biased content in prompts, helping them build responsible AI applications.

Key takeaways

The magic of prompt engineering is that it channels AI’s computational power toward results that would be hard to achieve otherwise. It gives businesses and individuals the means to unlock the full potential of generative AI, creating more intelligent interactions and new applications across many fields.

Among prompting approaches, the promise of zero-shot prompting is particularly striking. It means telling an AI model what to do without giving it any examples or task-specific training, so the model relies on its general knowledge alone. For many tasks, that is enough to return strong results.

By understanding how to use prompts well, where they apply, and where they fall short, you can achieve significant results. Challenges remain, but the AI landscape is shifting quickly, and the room for growth is large.

At Genuisee, we specialize in prompt structuring to deliver practical, results-oriented solutions for our clients. Whether you are new to AI or fine-tuning your current workflow, we’re here to help you thrive.

Here’s a short recap of what we covered:

| Area | Summary |
| --- | --- |
| Prompt techniques | Craft clear, precise, and structured prompts to maximize the accuracy and relevance of AI output. |
| Applications | Apply prompt engineering in content creation, customer service, data analysis, and more. |
| Best practices | Refine prompts iteratively and test them across multiple use cases to improve reliability and adaptability. |
| Limitations and challenges | Expect ambiguity, ethical concerns, and AI models’ limited grasp of context. |
| Future | Automated prompt optimization, built-in ethical safeguards, and integration with no-code tools. |

Are you ready to reimagine how your business uses AI? Contact Genuisee today and turn the most advanced technologies into actionable results. Let us help you build your future.